June 20, 2016

By: Michael Feldman

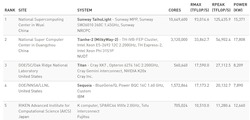

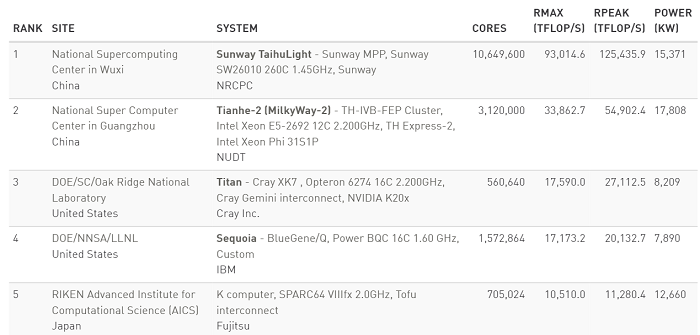

US supercomputing was dealt a couple of blows on Monday when the latest rankings of the 500 fastest supercomputers in the world were announced at the opening of the International Supercomputing Conference (ISC). In the updated TOP500 list, China retained its leadership at the top with a new number one system, while also overtaking the United States in the total number of systems and in aggregate performance. This is the first time in the list's history that the US did not dominate the TOP500 results in these latter two categories.

The new top supercomputer, known as Sunway TaihuLight, achieved a world-leading 93 petaflops on the Linpack benchmark (out of 125 peak petaflops). The system, which is based almost exclusively on technology developed in China, is installed at the National Supercomputing Center in Wuxi and delivers more performance than the next five most powerful systems on the list combined. TaihuLight displaces its older cousin, Tianhe-2, a 33-petaflop machine running at the National Super Computer Center in Guangzhou, which had been the top-ranked supercomputer since June 2013. For a deeper look at TaihuLight, see our extended coverage here.

Besides being tops in performance, China now leads in sheer numbers as well. Although the country took the system-count lead away from the US, the two superpowers are on nearly equal footing in this regard: China has 167 systems, the US has 165. But considering that just 10 years ago China claimed a mere 28 systems on the list, with none ranked in the top 30, the nation has come further and faster than any other country in the history of supercomputing.

Every other country is much further back. In the latest rankings, Japan has 29 systems, followed by Germany with 26, France with 18, and the UK with 12. No other nation claims more than 10 systems on the list.

It should be noted that the surge in Chinese systems was achieved with the help of a number of generically named Internet service providers and anonymous commercial firms that ran Linpack on their in-house clusters. It's likely that a good number of these systems don't actually run what would be considered HPC workloads in their day jobs, so their presence on the list has probably inflated China's representation to some degree. For what it's worth, though, the US and other countries also claim a number of these questionable HPC machines, so it's difficult to make objective comparisons.

With this latest list, the aggregate performance of all 500 machines is more than half an exaflop, 566.7 petaflops to be exact. Of that total, the number one and two machines from China represent 126.9 petaflops, or nearly a quarter of the total. As a result, China captured the TOP500 performance lead from the US for the first time.

Vendor share in supercomputing reflects the usual suspects: Cray leads in installed performance, with about 20 percent of the total; Hewlett Packard Enterprise is tops in total number of systems, with 127; and Intel dominates the other chipmakers by a wide margin, with its processors in 455 systems. The majority of accelerator-equipped systems are powered by NVIDIA GPUs (67 systems) and Intel Xeon Phi processors (26 systems), but the total number of accelerated supercomputers declined from 104 to 93 since the last list in November 2015.

Despite all the new Chinese systems, turnover on the list continues to be sluggish. The top 10 is unchanged, save for the new number one, which bumped all the other systems down a notch. The rest of the list followed that general pattern, extending a slowdown in performance growth that began back in 2008. Prior to that year, aggregate supercomputer performance was increasing at around 90 percent per year; after 2008, growth flattened out to 55 percent per year. If TaihuLight hadn't been submitted this time around, aggregate performance would have barely budged since last November.

There are myriad theories out there that try to explain the lagging growth rate: Moore's Law is slowing down (maybe slightly, but not nearly enough to account for the lesser growth); the global recession has killed government spending (that recession is essentially over); and owners are less interested in reporting their machines (doubtful, since that goes against human nature).

The best clue is that growth in system size, that is, the number of server nodes, is slowing. Systems are not getting smaller in absolute terms; they are just growing at a lower rate. That suggests users are less willing to pay for larger systems. While Moore's Law essentially gives you a free ride to more performance, adding extra servers and the network to connect them, not to mention the power to run them, costs real money.

Conventional wisdom says that HPC users have an insatiable demand for performance, but they must still weigh that demand against cost/benefit considerations. The slowdown in system size growth suggests that the cost of each additional increment of performance is rising relative to its benefit. Adding accelerators can deliver better performance without increasing system size, but their slow rate of adoption suggests that the ancillary costs of moving to accelerators are holding back their uptake.

Identifying trend lines in system size growth is complicated by the fact that the top end of the list, made up primarily of capability supercomputers that serve large research communities, is used very differently (and procured very differently) from most of the systems in the remainder of the list, which are smaller machines used to run a defined set of well-worn applications.

Overall, though, the slowing growth rate points to a maturation of the HPC user community. That's not a bad thing in itself, but it will lead users and vendors down a different path if the trend continues. There's sure to be more talk this week at ISC about how supercomputing growth is evolving. Stay tuned for more coverage on this topic.