Sept. 26, 2017

By: Michael Feldman

Intel Labs has developed a neuromorphic processor that researchers there believe can perform machine learning faster and more efficiently than conventional architectures like GPUs or CPUs. The new chip, codenamed Loihi, has been six years in the making.

Loihi is powered by digital versions of neurons and synapses, which are used to perform asynchronous spiking, a type of computation that is analogous to the way our brains work. The current theory is that the biological neural networks inside our heads create pulses corresponding to the external stimuli presented to them, and store those pulses as information by modifying the network connections. Loihi does something similar, with its own virtual neurons and synapses.
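
To make the idea of spiking and connection-based learning concrete, here is a minimal sketch of a textbook leaky integrate-and-fire neuron with a simple Hebbian-style weight update. It is not Intel's neuron model or Loihi's learning rule, neither of which is described here. The function name `simulate_lif` and every parameter value are assumptions chosen purely for illustration.

```python
def simulate_lif(input_spikes, weight=0.5, threshold=1.0, leak=0.9,
                 learning_rate=0.05):
    """Simulate one leaky integrate-and-fire neuron (illustrative only).

    input_spikes: sequence of 0/1 values, one per time step.
    Returns (output_spikes, final_weight).
    All parameters are hypothetical and not taken from Loihi.
    """
    potential = 0.0
    output_spikes = []
    for spike_in in input_spikes:
        # The membrane potential decays ("leaks") each step, then
        # integrates the weighted input pulse.
        potential = leak * potential + weight * spike_in
        if potential >= threshold:
            # The neuron fires a pulse and resets.
            output_spikes.append(1)
            potential = 0.0
            if spike_in:
                # Hebbian-style rule: an input that coincides with an
                # output spike strengthens the connection (the synapse).
                weight += learning_rate
        else:
            output_spikes.append(0)
    return output_spikes, weight


if __name__ == "__main__":
    spikes, final_weight = simulate_lif([1] * 10)
    print(spikes, round(final_weight, 2))
```

In this toy model the information is held in the synaptic weight itself: with a steady input, the neuron needs three steps to reach its first spike and then fires every second step as the connection strengthens.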

Such a model lends itself to performing machine learning tasks interactively and continuously. Intel Labs director and corporate VP Michael Mayberry penned an editorial on the new chip, in which he explains the significance of the architecture like this:

“The Loihi test chip offers highly flexible on-chip learning and combines training and inference on a single chip. This allows machines to be autonomous and to adapt in real time instead of waiting for the next update from the cloud. Researchers have demonstrated learning at a rate that is a 1 million times improvement compared with other typical spiking neural nets as measured by total operations to achieve a given accuracy when solving MNIST digit recognition problems. Compared to technologies such as convolutional neural networks and deep learning neural networks, the Loihi test chip uses many fewer resources on the same task.”

He goes on to describe a couple of typical applications where such real-time processing would be advantageous: tracking your heartbeat to detect the emergence of potential problems before they occur, and monitoring cybersecurity systems to detect hacking attempts or other types of system breaches. He notes the technology can also be used for various automotive and industrial applications – basically any application where real-time decision making must be performed, or where training must be a continuous process. And since, according to Mayberry, Loihi can be 1,000 times more energy efficient than a general-purpose computer, it can be embedded in things like personal robots or other devices that operate independently of the electric grid.

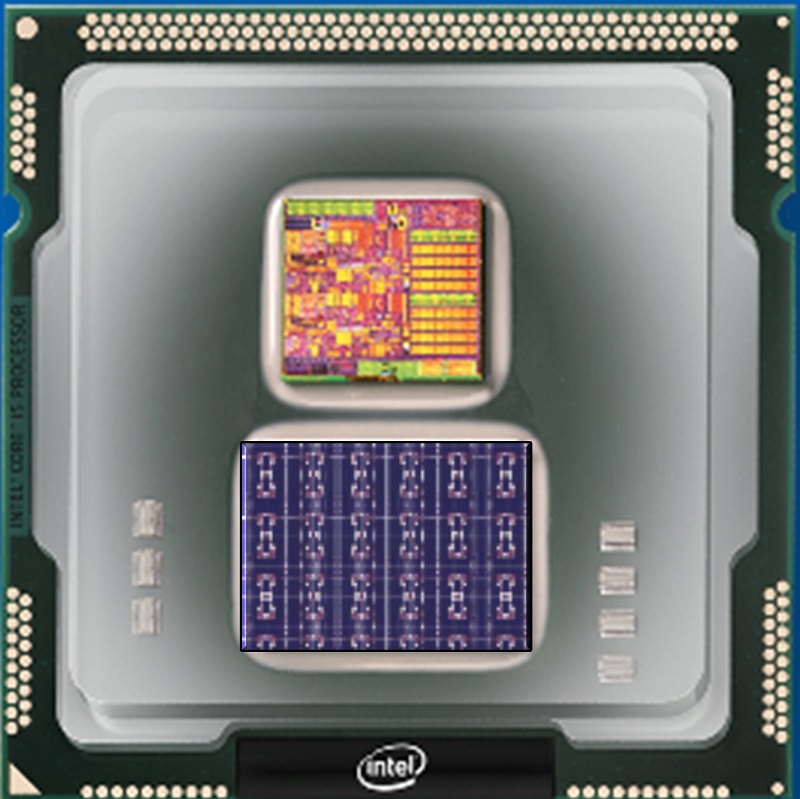

To accomplish this, Loihi has been equipped with 130,000 neurons and 130 million synapses, and is built on Intel’s 14nm process technology. Digging a little deeper, the chip is described as a manycore mesh, with each core outfitted with a “learning engine” suitable for supervised and unsupervised learning. It supports a range of network topologies, including sparse, hierarchical and recurrent neural networks.

Intel is not the only one working on neuromorphic silicon. IBM Research has developed TrueNorth, a neuromorphic chip that was introduced in 2014. It has 1 million neurons and 256 million synapses spread across 4,096 cores, and is currently under trial at the Department of Energy’s Lawrence Livermore National Laboratory and the US Air Force. It is also being studied by more than 40 universities, government labs, and businesses. The chip has been used for image and speech recognition problems, and according to IBM has exhibited accuracy on par with that of existing platforms that have been tuned for these applications for some time.

Although on paper, TrueNorth contains nearly eight times as many neurons and twice as many synapses as Loihi, that doesn’t guarantee superior capability. The devil will be in the details of how the cores operate under the hood. As of yet, Intel has not released benchmarks of Loihi, although Mayberry writes that they have developed and tested “several algorithms with high algorithmic efficiency for problems including path planning, constraint satisfaction, sparse coding, dictionary learning, and dynamic pattern learning and adaptation.”
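
The on-paper comparison can be checked with a few lines of arithmetic using only the neuron and synapse counts quoted in this article. The dictionary and variable names below are assumptions for illustration. The synapses-per-neuron figure is a simple average derived from those counts, not a specification published by either vendor.

```python
# Counts as quoted in this article.
LOIHI = {"neurons": 130_000, "synapses": 130_000_000}
TRUENORTH = {"neurons": 1_000_000, "synapses": 256_000_000}

# How many times larger TrueNorth is than Loihi on each measure.
neuron_ratio = TRUENORTH["neurons"] / LOIHI["neurons"]
synapse_ratio = TRUENORTH["synapses"] / LOIHI["synapses"]

# Average number of synapses available per neuron on each chip.
loihi_fan = LOIHI["synapses"] / LOIHI["neurons"]
truenorth_fan = TRUENORTH["synapses"] / TRUENORTH["neurons"]

print(f"neurons:  TrueNorth has {neuron_ratio:.2f}x Loihi's count")
print(f"synapses: TrueNorth has {synapse_ratio:.2f}x Loihi's count")
print(f"average synapses per neuron: Loihi {loihi_fan:.0f}, "
      f"TrueNorth {truenorth_fan:.0f}")
```

On these figures Loihi averages roughly four times as many synapses per neuron as TrueNorth, one illustration of why raw totals alone do not settle which chip is more capable.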

How that translates into real-life application performance is unknown, but since Loihi is scheduled to become available to universities and research institutions in the first half of 2018, it will theoretically be possible for these organizations to compare the Intel and IBM neuromorphic chips on various applications.

The bigger question for Intel is whether this test chip will result in a commercial offering, and if so, where it would fit into the company’s AI processor portfolio. Currently, Intel is planning to attack this market on multiple fronts, starting with Knights Mill, a Xeon Phi processor tweaked for deep learning. It’s scheduled to launch in Q4 of this year. Lake Crest, a specialized deep learning coprocessor based on its Nervana IP, is also under development. It was originally supposed to be released in the first half of this year, but that never happened and its new launch date is unknown. Further down the roadmap is Knights Crest, a Xeon-Nervana hybrid of some sort, which is expected to appear in 2020.

Intel has also positioned its Altera FPGA portfolio for deep learning inferencing and these chips have found a major foothold in Microsoft’s Azure cloud for this application set. The chipmaker also recently launched the Movidius Neural Compute Stick, a USB-based inference platform aimed at host devices at the edge. Even the generic Xeon CPU now has some AI tweaks in its latest Skylake incarnation. All of this reflects Intel’s thinking that AI capability will be ubiquitous across the market it serves, and will have to be supported in all its platforms to one degree or another.

The neuromorphic architecture could well have the biggest upside potential of any of these architectures, and may end up supplanting GPUs and one or more of Intel’s own AI platforms at some point. Even if commercialization is years away, the eventual size of the AI market compels IT behemoths like Intel and IBM to engage in high-risk R&D. We’ll just have to wait and see how these particular experiments turn out.

Image: Loihi chip. Source: Intel Labs.