Sept. 12, 2017

By: Michael Feldman

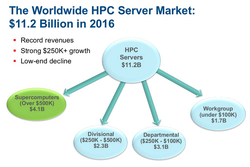

Hyperion Research says 2016 was a banner year for sales of HPC servers. According to the analyst firm, HPC system sales reached $11.2 billion for the year and are expected to grow more than 6 percent annually over the next five years. But it is the emerging sub-segment of artificial intelligence that will provide the highest growth rates during this period.

Source: Hyperion Research

At Hyperion’s recent HPC User Forum in Milwaukee, Wisconsin, the company’s analyst crew recapped the previous year in HPC and offered their five-year projection based on their most recent data. Sticking with servers for the moment, Hyperion predicts that HPC system revenue will reach $14.8 billion in 2021, driven in no small part by the supercomputer segment, that is, systems costing $500,000 or more. That segment is projected to account for nearly $5.4 billion, more than a third of the total server revenue, in 2021. Hyperion attributed the high-end growth to exascale investments around the world coming to fruition.

The first exascale systems from China, the US, Japan and Europe are expected to be deployed between 2019 and 2023, which conveniently brackets the 2021 end date of the five-year forecast. Hyperion is keeping tabs on exascale sites individually, since each will deploy systems costing hundreds of millions of dollars apiece. Spending on that scale noticeably moves total annual HPC revenue.

Besides HPC server revenue, there are storage, middleware, applications and services, which together accounted for an additional $11.2 billion of spending in 2016, for $22.4 billion in total. Hyperion is saying that will grow to $30.3 billion in 2021, representing a 6.2 percent compound annual growth rate (CAGR) over that period.

Source: Hyperion Research
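
For readers who want to check the arithmetic behind the 6.2 percent figure, the standard CAGR formula can be sketched in a few lines of Python. This is only an illustration using the rounded dollar figures quoted above; the function name `cagr` and the variable names are hypothetical, and nothing here is meant to represent how Hyperion builds its forecasts.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.062 means 6.2 percent)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    # Standard formula: (end / start) ** (1 / years) - 1
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Rounded figures from the article, in billions of US dollars.
total_2016 = 11.2 + 11.2   # servers plus storage, middleware, applications and services
total_2021 = 30.3          # projected total for 2021

rate = cagr(total_2016, total_2021, years=5)
print(f"{rate:.1%}")  # prints 6.2%
```

Because the inputs are rounded, the same calculation applied to other figures in the forecast may land a few tenths of a point away from the published rates.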

Hyperion CEO Earl Joseph noted that last year’s good HPC system revenue numbers represented something of a rebound from 2015, which he characterized as “soft.” But in 2016, HPC grew well, especially for systems costing more than $250,000. “There is still a little bit of flatness happening at the very high end of the market,” said Joseph. “But our view of that flatness there is because of a massive bounce coming in the next 18 months.”

To anyone who follows the supercomputing space for any length of time, it quickly becomes apparent that the market is something of a roller coaster. In particular, large system procurements tend to cluster in years when the release of new processors and government funding sync up. In the case of the chip componentry, the new Skylake Xeons, AMD EPYC CPUs, and NVIDIA Volta GPUs were all released into the wild this summer, laying the foundation for the next wave of HPC system spending over the next 12 months. There is also a backlog of big government systems in the pipeline, including the pre-exascale US DOE systems: Summit, Sierra, and (maybe) Aurora.

On the system vendor side, Hewlett Packard Enterprise (HPE) has established itself as the dominant player, according to Hyperion, with nearly $3.8 billion in HPC server sales in 2016. In second place is Dell, which trails with just over $2.0 billion in revenue. Further back are Lenovo and IBM, at $909 million and $492 million, respectively. Cray nearly caught up with IBM in 2016 with a revenue tally of $461 million. The relatively new hierarchy is the result of IBM’s sale of its x86 business to Lenovo, which allowed HPE, Dell, and Cray to grab a good chunk of Big Blue’s HPC market share over the last two years.

By vertical, the government lab sector is still number one in system spending with over $2.0 billion in 2016. Next comes academia, which plunked down $1.9 billion for HPC infrastructure last year. Other top spenders include the CAE sector ($1.3 billion), defense ($1.1 billion), and biosciences ($1.0 billion).

Hyperion is also starting to track the adjacent machine learning/AI market, which at least for the time being is being folded under their high performance data analysis (HPDA) sub-segment. Basically, HPDA encompasses the data analytics side of HPC modeling and simulation (essentially, big data HPC), plus what the analyst firm is calling “advanced analytics.”

Big data HPC is the more established of the two HPDA categories and is already using nearly a third of the cycles at the HPC sites that Hyperion tracks. The current approach is to run analytics and simulations on the same machine, but Hyperion senior research VP Steve Conway says the longer-term trend will be to buy separate machines for each, since the application requirements are so different. Advanced analytics is the new kid on the block, and includes things like data-driven science and engineering, intelligence/security analytics, and knowledge discovery around machine learning, cognitive computing and AI in general.

What’s interesting here is that advanced analytics includes new HPC use cases like fraud detection, affinity marketing, business intelligence, and precision medicine, all of which have tremendous upside revenue potential. “Business intelligence is the fastest growing HPC use case out of these,” said Conway, “but it’s starting at a smaller base.” Precision medicine isn’t much of a market today, he noted, but given the magnitude of healthcare spending, it will eventually be larger than all the others.

Hyperion is projecting that the HPDA server market will grow from about $1.5 billion in 2015 to around $4.0 billion by 2021, which represents a 17 percent CAGR over this timeframe. When they break out the AI piece, the growth rate is even more impressive. From a $246 million base for AI server spending in 2015, Hyperion thinks it will grow over the next five years to $1.3 billion, representing a CAGR of 29.5 percent. Left unsaid is the realization that if those trends continue, AI will dominate high-end data analytics revenue in a relatively short amount of time.

Source: Hyperion Research

In any case, keeping all of that spending on artificial intelligence infrastructure straight is going to be a challenge for Hyperion, or, for that matter, anyone else tracking the overlapping HPC and AI markets. Many of today’s GPU-equipped supercomputers are already spending some of their cycles on machine learning codes, and upcoming systems like Summit, with its deep learning-optimized V100 GPUs, will certainly be employed to run AI applications. Then there are systems like Japan’s AI Bridging Cloud Infrastructure (ABCI) and TSUBAME 3.0, which were explicitly designed for both HPC simulations and machine learning applications. Which revenue bucket do these systems end up in?

Regardless of how the money is counted, the addition of AI, as well as other types of high-end data analytics, promises to propel HPC into new application areas and help reinvigorate a market that might otherwise have suffered from the slow disintegration of Moore’s Law. That means more revenue for HPC vendors, more applications for HPC users, and more work for everybody. For analyst firms like Hyperion, it’s just more work.