Oct. 19, 2017

By: Michael Feldman

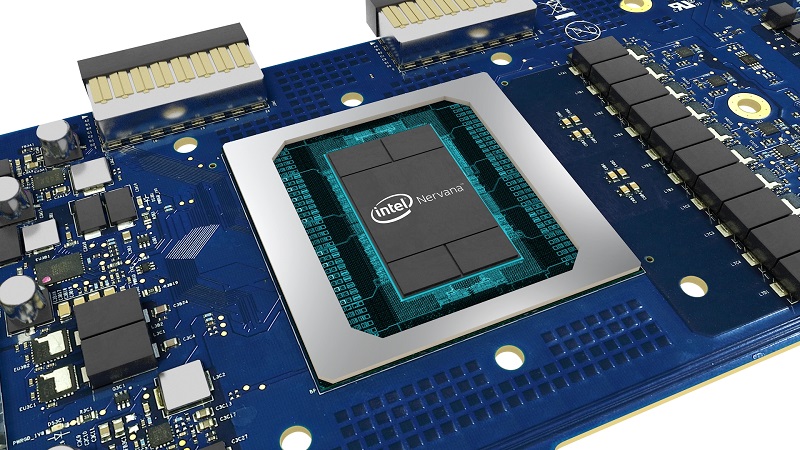

In an editorial posted on Intel’s news site, Intel CEO Brian Krzanich announced that the company would release its first AI processor before the end of 2017. The new chip, formerly codenamed “Lake Crest,” will be officially known as the Nervana Neural Network Processor, or NNP for short.

Source: Intel

As its name implies, the chip will use technology from Nervana, an AI startup Intel acquired for more than $350 million last year. Unlike GPUs or FPGAs, the NNP is a custom-built coprocessor designed specifically for deep learning, that is, for processing the neural networks on which these applications are based.

In that sense, Intel’s NNP is much like Google’s Tensor Processing Unit (TPU), a custom-built chip the search giant developed to handle much of its own deep learning work. Google is already using the second generation of its TPU, which reportedly delivers 180 teraflops of deep learning performance and is used for both neural net training and inferencing.

In fact, Intel seems to be aiming the NNP at hyperscale companies and other businesses that don’t have access to Google’s proprietary TPU technology. In particular, Krzanich says Facebook is in “close collaboration” with Intel on the NNP, which implies the social media company will be Intel’s first customer, or at least its first major one.

Of course, Intel is also looking to dislodge NVIDIA GPUs from their dominant perch in the AI-accelerated datacenter. NVIDIA’s latest offering for this market is the V100 GPU, a chip that can deliver 120 teraflops of deep learning performance. Microsoft, Tencent, Baidu, and Alibaba have all indicated interest in deploying these NVIDIA accelerators in their respective clouds.

Intel hasn’t revealed peak performance numbers for the NNP. (It surely has them, given that the processors will be commercially available in less than three months.) When Nervana was an independent company, it claimed its design would deliver a 10x performance improvement over the GPUs of the day. But that was last year, before both NVIDIA and Google essentially achieved that performance multiple on their own with their latest silicon.

To be taken seriously, the NNP needs to deliver performance in the neighborhood of the V100 and the second-generation TPU, and ideally exceed both of them. Intel also needs to demonstrate that deep learning codes can effectively extract this performance from the NNP chip, including in multi-processor configurations.

There’s a good chance Intel will be able to achieve this. For one thing, the company has its advanced 14nm process technology at its disposal, something Nervana probably never factored into its calculations when it was operating independently. Intel has also been rapidly building up its software stack for AI and deep learning. And even if Intel doesn’t have the broad ecosystem that GPUs enjoy in this arena, what it has should be enough to support initial development and deployments on the new platform.

It’s also likely that the Intel engineers who came over from Nervana have been improving on the original design. Naveen Rao, Nervana’s former CEO and co-founder, who now leads Intel’s AI Products Group, penned his own editorial on the NNP announcement, describing the chip design in some detail. In his write-up, he highlights the processor’s Flexpoint numerical format, its high-performance interconnects, and its software-controlled memory management. While no new technology is revealed here, the Intel/Nervana engineers have had plenty of time to optimize these features and bring the technology on par with that of the competition.

Krzanich notes that the company has multiple generations of NNP processors in the pipeline, with the intent to evolve the technology substantially over the next few years. “This puts us on track to exceed the goal we set last year of achieving 100 times greater AI performance by 2020,” he said.

The 2020 reference alludes to the “Knights Crest” processor, a chip Intel discussed last November, when company executives outlined their initial AI hardware roadmap. At that time, they characterized the Knights Crest design as a bootable Xeon CPU with an integrated Nervana-based neural network accelerator on-chip. The NNP that Intel is about to release, by contrast, is a coprocessor that will rely on a CPU host.

Obviously, Intel has high hopes for this application space; otherwise, the company would not have pursued a custom chip, let alone a whole series of them. Krzanich pointed to an IDC estimate that AI spending will reach $46 billion by 2020 and will span most major industries. He cites healthcare, social media, automotive, and weather forecasting as four areas where this technology is poised to make major inroads.

“We are well on our way to unlocking the potential of AI and paving a path to new forms of computing,” Krzanich writes. “It will be amazing to watch the industry transform as our latest research and compute breakthroughs continue to mature.”