Oct. 20, 2017

By: Michael Feldman

Sam Mahalingam, Chief Technical Officer at Altair, thinks much of the value of exascale computing will be provided by machine learning and other data-driven techniques.

In an article published earlier this week on the Department of Energy’s Exascale Computing Project (ECP) website, Mahalingam starts off by reiterating what has been conventional wisdom for some time, namely that exascale computing will be necessary to drive the kind of multiphysics applications being demanded by industrial users. In particular, he’s talking about simulations that integrate solvers for computational fluid dynamics (CFD), electromagnetics, and structural engineering. Such applications could be used to prototype entire airplanes, ships, or ground vehicles. Not surprisingly, these codes are much more complex than those used to design a single component, such as an airplane wing or an automobile engine, and therefore require a lot more computational power.

From the article:

“The need for exascale really becomes extremely important because the size and complexity of the model increases as you do multiphysics simulations,” Mahalingam said. “This is a lot more complex model that allows you to truly understand what the interference and interactions are from one domain to another. In my opinion, exascale is truly going to contribute to capability computing in solving problems we have not solved before, and it’s going to make sure the products are a lot more optimized and introduced to the market a lot faster.”

But Mahalingam also believes that these simulations will become much more powerful if they are retooled as data-driven models. That is, if operational data from real-world use could be captured and then used to guide the simulations, engineers would be able to shrink the design space appreciably and refine the subsequent prototypes in much shorter periods of time. Alternatively, these data-driven techniques could be used to determine part failures and preventive maintenance schedules on existing components.

According to Mahalingam, deep learning and machine learning algorithms will be key to developing these data-driven models, and will free engineers to “think about more complex problems to solve, and in turn come up with more innovative products.” Using the data to develop better models, instead of just using it to validate them, is something of a paradigm shift for HPC developers.

An example of the machine learning approach is a neural network code known as CANDLE (CANcer Distributed Learning Environment), which was developed by the Department of Energy to help guide drug response simulations. In this case, unsupervised learning, based on clinical reports, is used to drive simulations of cancer populations in order to optimize treatments for patients. The CANDLE code, by the way, is going to run on the upcoming pre-exascale supercomputers Summit and Sierra, and will eventually be scaled up to run on full-blown exascale machines.

But as Mahalingam points out, the use of these neural networks to refine traditional simulations will probably move the emphasis away from brute-force capacity computing and toward a more cognitive paradigm. And not just for manufacturers, but, as in the CANDLE case, for things like life sciences and personalized medicine as well. “This is much bigger than any one company or any one industry,” Mahalingam said. “If you consider any industry, exascale is truly going to have a sizeable impact, and if a country like ours is going to be a leader in industrial design, engineering and manufacturing, we need exascale to keep the innovation edge.”

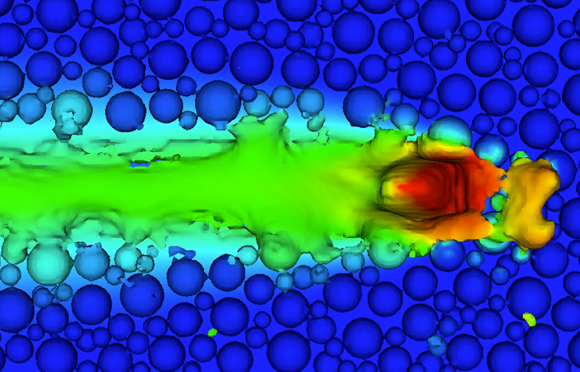

Image: Laser melting metal powder. Source: Oak Ridge National Laboratory