Oct. 27, 2017

By: Michael Feldman

Amazon has created three new EC2 instances based on NVIDIA’s Volta V100 GPU, making it the first public cloud provider to offer the blazingly fast accelerators.

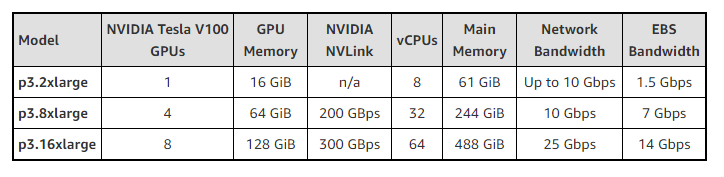

The P3 instances, as they are called, are designed for the kinds of computationally intensive tasks that GPUs have proven to be so adept at: machine learning and deep learning, computational fluid dynamics, computational finance, seismic analysis, molecular modeling, and genome analysis. The instances come in configurations of one, four, or eight V100s, with each GPU paired with eight Intel Xeon cores. The complete configuration is provided in the table below.

Source: Amazon


The most popular workload category for these instances is likely to be the first one mentioned: machine learning and deep learning. Thanks to the V100’s Tensor Cores, each GPU is able to provide 125 teraflops of performance on these codes. (For you HPC traditionalists, the V100 also offers 15.7 teraflops of single-precision and 7.8 teraflops of double-precision performance.) On real deep learning applications, NVIDIA has demonstrated that the V100 delivers three times the performance of the previous-generation P100 for training and inference, and the P100 was no slouch when it came to crunching neural networks.
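To put the per-GPU figures in instance terms, the short sketch below multiplies the peak numbers quoted above by the one-, four-, and eight-GPU configurations. It assumes peak throughput adds up linearly across GPUs, which is a theoretical ceiling rather than something a real application will reach; the function and constant names are made up for illustration.

```python
# Peak per-GPU figures quoted in the article, in teraflops.
V100_PEAK_TFLOPS = {"tensor": 125.0, "single": 15.7, "double": 7.8}

# GPU counts of the three P3 configurations described in the article.
P3_GPU_COUNTS = (1, 4, 8)


def aggregate_peak_tflops(gpu_count):
    """Theoretical peak per instance, assuming linear scaling (an assumption)."""
    return {
        precision: round(tflops * gpu_count, 1)
        for precision, tflops in V100_PEAK_TFLOPS.items()
    }


if __name__ == "__main__":
    for count in P3_GPU_COUNTS:
        print(count, "GPU(s):", aggregate_peak_tflops(count))
```

Under that assumption, the eight-GPU instance tops out at 1,000 teraflops of Tensor Core performance, or one petaflop on paper.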

The launch of the P3 instances on EC2 makes good on a promise that Amazon made at the V100 unveiling back in May, when Matt Wood, Amazon’s General Manager for Deep Learning and AI, said the company would make the Volta GPUs available as soon as NVIDIA was able to deliver them. As the first cloud provider offering these latest NVIDIA GPUs, Amazon will be able to pick some low-hanging fruit with regard to customers who have been waiting to get their hands on the new silicon.

Presumably both Google and Microsoft will offer the V100 in their respective public clouds at some point, given the customer demand for these GPUs for deep learning work. But it’s worth noting that, unlike Amazon, both of those companies have developed their own accelerator technologies, at least for internal purposes: Google with its Tensor Processing Unit (TPU), and Microsoft with its global deployment of FPGAs. Microsoft has already implied it would be making its FPGA infrastructure available to cloud users at some point in the future, and Google could very well do the same with its proprietary TPUs.

NVIDIA, of course, would prefer that not happen. So it’s no coincidence that in conjunction with the availability of the V100 on Amazon EC2, NVIDIA announced it is making its NVIDIA GPU Cloud (NGC) container registry available on the P3 instances, and will make it available on other clouds as soon as the other providers jump on the V100 bandwagon. The NGC container registry allows users to pull in an optimized stack of deep learning software of their choice for a given cloud platform.

At this point, though, only EC2 users will be able to take advantage of these NGC stacks, and, actually, only a subset of those users. Amazon is initially making the P3 instances available in the US East (Northern Virginia), US West (Oregon), EU (Ireland), and Asia Pacific (Tokyo) regions.

These instances don’t come cheap. In the US, the hourly rate is $24.48 for the eight-V100 configuration, and that price nearly doubles in Tokyo. Hourly costs for the one- and four-GPU instances are proportionally less. For a complete rundown of pricing options, check out Amazon’s interactive EC2 instances webpage.
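For a rough sense of what "proportionally less" means in practice, the sketch below derives estimated hourly rates for the smaller configurations from the quoted $24.48 US rate. It assumes strictly linear pricing per GPU, as the article describes, and covers US on-demand pricing only; the function names are illustrative, and Amazon's pricing page remains the authority on actual rates.

```python
# US hourly rate for the eight-V100 configuration, as quoted in the article.
EIGHT_GPU_HOURLY_USD = 24.48


def estimated_hourly_usd(gpu_count):
    """Estimate the hourly rate, assuming price scales linearly with GPU count."""
    per_gpu_hour = EIGHT_GPU_HOURLY_USD / 8
    return round(per_gpu_hour * gpu_count, 2)


def estimated_job_cost_usd(gpu_count, hours):
    """Estimate the cost of keeping one instance running for a number of hours."""
    return round(estimated_hourly_usd(gpu_count) * hours, 2)


if __name__ == "__main__":
    for count in (1, 4, 8):
        print(f"{count} GPU(s): ~${estimated_hourly_usd(count):.2f}/hour")
    # A hypothetical 24-hour training run on the eight-GPU instance.
    print(f"24 hours on 8 GPUs: ~${estimated_job_cost_usd(8, 24):.2f}")
```

By this estimate, a single-V100 instance works out to about $3.06 per hour, and a full day on the eight-GPU configuration to roughly $587.52.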