Sept. 27, 2016

By: Michael Feldman

Software giant bets the (server) farm on reconfigurable computing

Microsoft has revealed that Altera FPGAs have been installed across every Azure cloud server, creating what the company is calling “the world’s first AI supercomputer.” The deployment spans 15 countries and represents an aggregate performance of more than one exa-op. The announcement was made by Microsoft CEO Satya Nadella and engineer Doug Burger during the opening keynote at the Ignite Conference in Atlanta.

The FPGA build-out was the culmination of more than five years of work at Microsoft to find a way to accelerate machine learning and other throughput-demanding applications and services in its Azure cloud. The effort began in earnest in 2011, when the company launched Project Catapult, the R&D initiative to design an acceleration fabric for AI services and applications. The rationale was that CPU evolution, à la Moore’s Law, was woefully inadequate for keeping up with the demands of these new hyperscale applications. As in traditional high performance computing, multicore CPUs alone were no longer keeping up.

Doug Burger with Microsoft-designed FPGA card


Microsoft’s first serious foray into FPGAs came with accelerating Bing’s search ranking algorithm, a cloud application used by hundreds of millions of people. Employing FPGAs programmed for that one piece of code, the company was able to double the throughput of the algorithm at a cost of just 10 percent more power per server. From there Microsoft experimented with convolutional neural networks, using FPGAs to train image classification networks, achieving performance per watt on par with that of the best GPUs at the time.
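The Bing result is easier to appreciate as performance per watt. The back-of-the-envelope sketch below uses only the two figures quoted above (2x throughput, 10 percent more power per server) and assumes performance per watt is simply throughput divided by power draw.

```python
# Figures quoted for the FPGA-accelerated Bing ranking workload.
throughput_gain = 2.0   # twice the throughput of a CPU-only server
power_gain = 1.10       # for 10 percent more power per server

# Assumption: performance per watt = throughput / power.
perf_per_watt_gain = throughput_gain / power_gain
print(f"perf/watt improvement: {perf_per_watt_gain:.2f}x")
```

In other words, the 10 percent power premium bought roughly an 82 percent gain in work done per watt.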

It should be said that, at least for the time being, Microsoft doesn’t appear to be aiming the FPGA-powered infrastructure at the training of these neural nets. The company still uses smaller clusters of GPUs to perform the more computationally intense training phase offline. It also offers GPU servers to the public in Azure for things like genome sequencing and other scientific workloads. The FPGA build-out looks to be primarily aimed at inferencing (evaluating already trained neural networks) for applications such as image recognition, speech recognition, and language translation. Such a capability requires a much larger infrastructure, one that can evaluate millions of requests in real time across a global cloud.

Conveniently, FPGAs are well suited for such workloads, offering the type of low-precision (8-bit and 16-bit) arithmetic that these deep learning and other AI algorithms require for optimal efficiency. More importantly, they can do so in a rather small power envelope – on the order of 25 watts per chip. That makes it relatively easy to slide one or more of these devices into web servers, which tend to be power-constrained because of the scale of the datacenters they must run in.
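To make “low precision” concrete, here is a minimal sketch of symmetric 8-bit quantization applied to a dot product, the core operation of neural network inference. It is a generic textbook scheme chosen for illustration, not Microsoft’s FPGA number format; the function names and sample values are hypothetical.

```python
def quantize_int8(values):
    """Hypothetical helper: symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each value to the nearest integer code and clamp to the int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a, b):
    """Hypothetical helper: dot product on int8 codes, accumulated as integers, then rescaled."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return acc * sa * sb                       # convert back to a real-valued result


# Illustrative (made-up) weights and activations.
weights = [0.42, -1.30, 0.07, 0.95]
inputs = [1.10, 0.25, -0.60, 0.80]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = int8_dot(weights, inputs)
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

The 8-bit result lands within a few thousandths of the full-precision answer, while each multiply operates on small integers, which is what lets narrow hardware datapaths run many of them per watt.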

Of course, if you’ve been tracking NVIDIA’s aspirations in AI for web serving, you’ll know the company offers a 50-watt P4 GPU aimed at roughly the same application set. Microsoft, though, as a result of its broad range of offerings in Windows, Office 365, Azure, Cortana, and Skype, sees the AI space differently. It wants a platform that can support many different kinds of intelligent services and applications – search (text and image), computer vision, natural language processing and translation, recommendation engines – basically anything that involves advanced analytics and pattern matching driven by web-scale datasets.

For such a diverse set of offerings, FPGA technology has an attraction beyond any CPU, GPU or purpose-built ASIC: reconfigurability. The chips can be programmed to operate as application-specific processors at efficiencies approaching those of a hard-wired ASIC, but with most of the flexibility of a CPU and certainly a GPU. There is a catch, though: programming FPGAs is hard. It involves specifying the gate layouts of the chip, and is therefore a much more difficult undertaking than programming general-purpose microprocessors. Although advances have been made in recent times with OpenCL and other FPGA-friendly compiler technologies, designing applications for one of these devices is not a task for the average software developer.

Microsoft has made the calculation that the added costs of FPGA application development can be amortized over a large infrastructure that can serve millions of customers. And the fact that these chips are reconfigurable means the soft-wired applications running on them can be changed on the fly to accommodate the varying workloads of Microsoft’s cloud. Even better, as new and improved algorithms are developed for these applications, the “hardware” can be upgraded in real time.

The fact that Intel acquired FPGA-maker Altera probably made that calculation that much easier for Microsoft. At the time of the Altera purchase last year, the chipmaker predicted that 30 percent of datacenter servers would be equipped with FPGAs by 2020. Now Microsoft is helping Intel make that prediction come true. With Intel backing FPGAs for the datacenter, Microsoft can be more confident that there will be an expanding array of software tools and server designs for FPGA-accelerated systems. Intel has already started shipping an integrated “Broadwell” Xeon-FPGA chip, which looks to be the kind of platform that Nadella and company would be especially interested in somewhere down the road.

Which brings us to the actual server design that Microsoft used to build out its FPGA cloud. The original Catapult server was a dual-socket 16-core Xeon box, with 64 GB of RAM and an Altera Stratix V FPGA card. On the storage side, four 2TB SATA disks and two 480GB Micron SSDs fed data to the processors. In August, Microsoft announced Catapult v2. Although information about this server is scant, in this design the FPGAs are connected to the CPU, main memory, and network. As a result, the FPGAs can talk directly to one another, rather than going through the CPU as the intermediary. Such a design is much better for the kind of scaled-out server farm needed for cloud-based machine learning tasks. However, at this point it is unclear what proportion of these second generation Catapult servers make up the Azure cloud.*

In his keynote on the new FPGA infrastructure, Nadella went through a litany of intelligent cloud applications beyond the usual suspects of Bing search, image recognition and Skype language translation. Part of this involved infusing Office 365 applications with AI smarts, adding features like computational linguistics to Word and intelligent email triage to Outlook. Another area of focus is Cortana, Microsoft’s personal digital assistant, which uses natural language processing on the front end and machine learning on the back end to provide something akin to Watson for the masses. It’s included on Windows platforms of all types and is also available on Android devices. The technology can also be accessed via a software suite for businesses that want to integrate Cortana’s AI building blocks into their own software.

Besides being employed for AI work, the FPGAs are also being used to accelerate Azure’s 25G cloud network. According to Microsoft, that acceleration has delivered a 10x reduction in latency. And apparently this feature is dynamic, so the FPGAs can be employed for network acceleration, machine learning workloads, or both.

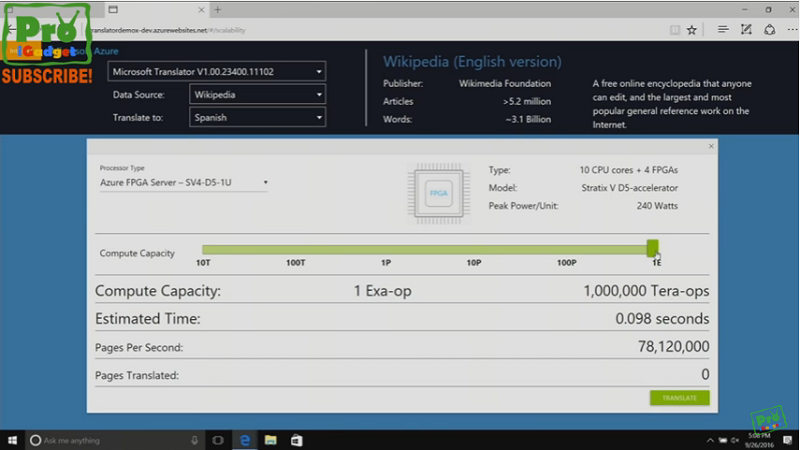

One of the most interesting demonstrations during Nadella’s AI soliloquy came when he brought out Doug Burger from Microsoft Research, who highlighted Azure’s enhanced language translation capabilities. A server equipped with four FPGAs translated all 1,440 pages of the famous Russian novel War and Peace into English in just 2.6 seconds; a single 24-core CPU server took 19.9 seconds and required 60 more watts of power to do so. By the way, the demonstration appeared to use a server with 10 CPU cores and four 30-watt accelerator cards based on the Altera Stratix V D5 FPGA. Its peak compute performance was listed at 7.9 tera-ops.
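The War and Peace demo reduces to a simple ratio. The sketch below uses only the numbers quoted above; it leaves out power, since only the 60-watt difference was given, not the absolute draw of either server.

```python
pages = 1440
fpga_seconds = 2.6   # server with four FPGA cards
cpu_seconds = 19.9   # single 24-core CPU server

speedup = cpu_seconds / fpga_seconds
print(f"speedup: {speedup:.1f}x")
print(f"FPGA server: {pages / fpga_seconds:.0f} pages/s")
print(f"CPU server:  {pages / cpu_seconds:.0f} pages/s")
```

That works out to roughly a 7.7x speedup, or about 554 pages per second versus about 72.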

An even more impressive demonstration involved translating the contents of the English version of Wikipedia. The same four FPGA cards would take nearly four hours to translate all 3 billion words of text (about a quarter-mile stack of paper if printed out) into another language. But the beauty of this setup is that the whole cloud now has FPGAs, and when Burger took most of the capacity of Azure – about one exa-op worth of compute – and applied it to the Wikipedia job, it ran through the entire text in less than a tenth of a second.
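The two Wikipedia figures can be cross-checked against each other. Assuming runtime scales perfectly with peak compute (an idealization; real distributed jobs lose some efficiency to communication) and that the 7.9 tera-ops peak applies to the same four-card setup, the ratio of one exa-op to 7.9 tera-ops predicts the cloud-wide runtime. The sketch treats “nearly four hours” as a full four hours, so it gives an upper-bound estimate.

```python
four_card_ops = 7.9e12          # peak ops/s of the four-FPGA demo server
azure_ops = 1.0e18              # "about one exa-op" of Azure capacity
four_card_seconds = 4 * 3600    # "nearly four hours", rounded up to four

# Assumption: perfectly linear scaling with peak compute.
scale_up = azure_ops / four_card_ops
ideal_seconds = four_card_seconds / scale_up
print(f"compute ratio: {scale_up:,.0f}x")
print(f"ideal-scaling runtime: {ideal_seconds:.2f} s")
```

The estimate, about 0.11 seconds, is the same order of magnitude as the sub-tenth-of-a-second run Burger reported.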

FPGA-driven Wikipedia translation using one exa-op


Now of course most people aren’t going to be able to use the entire capacity of the Azure cloud, not even for a tenth of a second, but the fact that Microsoft has essentially built the world’s first exascale computer is quite an achievement. Yes, it’s not double precision floating point exaflops, but nonetheless, for machine learning software, an exa-op is a potential game-changer, even if thousands of people are sharing that resource. “That means we have 10 times the AI capability of the world’s largest existing supercomputer,” Burger said. “We can solve problems with AI that haven’t been solved before.”

* In subsequent correspondence with Doug Burger, he told TOP500 News that all of the FPGAs deployed in the cloud build-out used the Catapult v2 design.