Oct. 28, 2016

By: Michael Feldman

The prospect of non-volatile DIMMs as an additional memory tier in the server is getting a lot more attention these days. The advent of novel memory technologies like 3D XPoint, resistive RAM/memristors, and NAND-based memory modules, together with market forces demanding much higher memory capacities, lower power usage, and in some cases memory persistence, is conspiring to bring a new generation of NVDIMM products to market.

Here we’re not concerned with NVDIMMs that use DRAM backed by batteries or by added NAND in case of a power failure. These are actually more expensive than straight DRAM DIMMs and are targeted at a niche market. To become mainstream, NVDIMMs need to be less expensive on a capacity basis than conventional DIMMs. To do this, they will need to employ the more cost-effective non-volatile memory technologies.

Certainly the need exists. Demand for bigger, cheaper memory has been building for a while, and it got a huge boost when “big data” became an industry catchword. That coincided with a wave of new datacenter applications that needed to manage large swathes of data in real time. In turn, those applications required much larger memory capacities to avoid the storage I/O bottleneck (or even the PCIe bottleneck, for that matter). Thus was born in-memory computing.

SAP HANA is the poster child for this trend, but the trend also encompasses broader areas like e-commerce processing, social media analytics, and algorithmic trading. Science and engineering applications that tap large datasets, such as genome analysis and electronic design automation (EDA), can also benefit greatly from an extra-large memory footprint. Even for less I/O-bound applications, increasing memory capacity can often improve throughput and efficiency.

For the HPC crowd, the availability of commodity NVDIMMs would certainly be a welcome respite from the widening imbalance between FLOPS and memory capacity. The current top-ranked supercomputer in the world, the 93-petaflop TaihuLight, represents an extreme example of that imbalance at 0.014 bytes per FLOPS. Bucking that trend is the broader use of “fat nodes,” premium-priced HPC servers outfitted with lots of memory, often supplemented by on-board SSDs. That seems to be the result of greater interest in data-intensive and analytics applications, which tend to require large memory footprints. Adding NVDIMMs would keep the cost of such nodes in check while simultaneously simplifying the programming model. In the longer term, NVDIMMs would also be quite useful in future exascale designs, where memory capacity is severely constrained by both hardware and energy costs.
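The 0.014 figure is simply total memory divided by Linpack performance. The sketch below reproduces it, assuming TaihuLight’s commonly cited configuration of 40,960 nodes with 32 GB each (figures not given in this article) and decimal gigabytes.

```python
# Memory-to-compute ratio for TaihuLight.
# Assumed figures (not from the article): 40,960 nodes x 32 GB, decimal units.
NODES = 40_960
GB_PER_NODE = 32
LINPACK_FLOPS = 93e15  # the 93-petaflop figure quoted above


def bytes_per_flops(total_bytes: float, flops: float) -> float:
    """Bytes of main memory per floating-point operation per second."""
    return total_bytes / flops


total_bytes = NODES * GB_PER_NODE * 1e9
ratio = bytes_per_flops(total_bytes, LINPACK_FLOPS)
print(f"{total_bytes / 1e15:.2f} PB -> {ratio:.3f} bytes per FLOPS")
```

Roughly 1.3 PB of memory spread over 93 petaflops works out to about 14 bytes for every thousand FLOPS.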

Given that kind of application demand, NVDIMMs are looking pretty attractive right now. Current products on the market use NAND flash, a slower but much less expensive and more energy-efficient medium than DRAM. Others employ a combination of NAND and a smaller amount of DRAM. Still on the drawing board are modules based on more exotic technologies such as ReRAM and 3D XPoint.

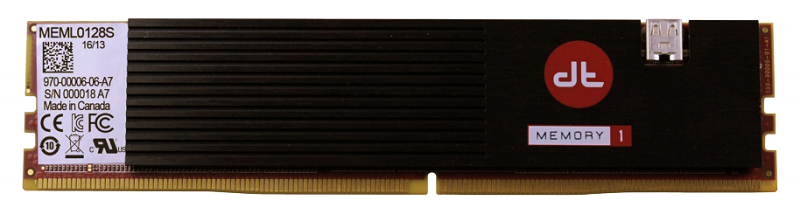

Source: Diablo Technologies

NVDIMMs remain a niche product today because of price: as mentioned earlier, they are based on DRAM, the very technology that needs to be supplanted to contain costs. A number of companies are working to correct this. Among them is Diablo Technologies, which recently announced its DDR4 Memory1 NVDIMM employing NAND flash. The first product became available in September.

Diablo’s approach is to use its NAND DIMMs for capacity and a much smaller amount of conventional DRAM DIMMs to cache memory reads and writes, preserving performance and protecting the endurance of the NAND components. Diablo software handles the data tiering between the Memory1 and DRAM modules, transparently to the OS and applications. The company recommends an 8:1 ratio of Memory1 to DRAM.
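Why a DRAM front end helps NAND endurance can be illustrated with a generic write-back LRU cache. This is a toy model, not Diablo’s tiering software, whose internals are not described here; the class, the page granularity, and the counters are all hypothetical. Repeated writes to hot pages are absorbed in DRAM and reach NAND only when a modified page is evicted or flushed.

```python
from collections import OrderedDict


class WriteBackCache:
    """Toy model: a small DRAM cache in front of NAND (generic write-back LRU).

    Hypothetical illustration only; not Diablo's actual tiering algorithm.
    """

    def __init__(self, capacity_pages: int) -> None:
        self.capacity = capacity_pages
        self.pages: "OrderedDict[int, bool]" = OrderedDict()  # page -> dirty?
        self.nand_reads = 0
        self.nand_writes = 0

    def _access(self, page: int, is_write: bool) -> None:
        if page in self.pages:
            # Hit: served from DRAM; mark as most recently used.
            self.pages.move_to_end(page)
            self.pages[page] = self.pages[page] or is_write
            return
        # Miss: fill the page from NAND (also on writes, since a partial
        # write still needs the rest of the page).
        self.nand_reads += 1
        if len(self.pages) >= self.capacity:
            _, dirty = self.pages.popitem(last=False)  # evict least recently used
            if dirty:
                self.nand_writes += 1  # only modified pages are written back
        self.pages[page] = is_write

    def read(self, page: int) -> None:
        self._access(page, is_write=False)

    def write(self, page: int) -> None:
        self._access(page, is_write=True)

    def flush(self) -> None:
        """Write all dirty pages back to NAND and mark them clean."""
        for page, dirty in list(self.pages.items()):
            if dirty:
                self.nand_writes += 1
                self.pages[page] = False


# 100 writes cycling over 4 hot pages, with room for 8 pages in DRAM.
cache = WriteBackCache(capacity_pages=8)
for i in range(100):
    cache.write(i % 4)
cache.flush()
print(cache.nand_reads, cache.nand_writes)  # 4 reads, 4 writes instead of 100
```

Real tiering software must also deal with page sizes, prefetching, and power-loss ordering; the sketch only shows the write-absorption effect.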

Each Memory1 module can hold 128 GB of data, which will allow up to 2 TB of main memory on a typical two-socket server. A 256 GB module is in the pipeline, which should double capacity to 4 TB. All this for what Diablo claims is “a fraction of the cost of the highest-capacity DRAM DIMMs.” If you don’t think too hard about that statement, you might forget that low-capacity DRAM DIMMs are also a fraction of the cost of high-capacity DRAM DIMMs, even on a dollar-per-byte basis. Diablo’s advantage is that if your application requires large amounts of memory that would have necessitated high-end DIMMs, you’ll save money by using its solution. Moreover, since you can now outfit these servers with a terabyte or more of memory, a greater number of applications should fit in fewer servers, which should save additional cash. Thanks to the non-volatile nature of NAND, energy savings should be realized as well.
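Working backward from the quoted figures, 2 TB of 128 GB modules implies 16 Memory1 modules per server. The sketch assumes that slot count and reads Diablo’s 8:1 recommendation as a capacity ratio; the article does not say whether the ratio refers to capacity or module count.

```python
# Capacity arithmetic for a Memory1-equipped two-socket server.
# Assumptions (not from the article): 16 Memory1 modules per server, and the
# 8:1 ratio read as Memory1 capacity to DRAM capacity. 1 TB taken as 1,024 GB.
GB_PER_TB = 1024


def memory1_config(modules: int, module_gb: int, ratio: int = 8) -> tuple[int, int]:
    """Return (Memory1 capacity, suggested DRAM cache capacity) in GB."""
    nand_gb = modules * module_gb
    dram_gb = nand_gb // ratio
    return nand_gb, dram_gb


for module_gb in (128, 256):
    nand_gb, dram_gb = memory1_config(modules=16, module_gb=module_gb)
    print(f"{module_gb} GB modules: {nand_gb // GB_PER_TB} TB Memory1 + {dram_gb} GB DRAM")
```

Under these assumptions, the 2 TB configuration would pair with about 256 GB of DRAM, and the 4 TB configuration with about 512 GB.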

Diablo has some company, though. In August, Netlist and Samsung jointly unveiled their DDR4 HybriDIMM, a NAND-DRAM hybrid module that, like the Diablo solution, uses NAND for capacity and DRAM as a caching buffer, along with software to do the data staging. Unlike Diablo’s solution, though, HybriDIMM integrates both types of media into the same memory module. The first-generation offering will provide 256 to 512 GB of NAND along with 8 to 16 GB of DRAM per module. The second generation ups this to 1 TB of NAND and 32 GB of DRAM.
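Assuming the low and high ends of the first-generation ranges pair up (256 GB with 8 GB, 512 GB with 16 GB), every HybriDIMM configuration quoted works out to 32 GB of NAND per GB of DRAM. If Diablo’s 8:1 figure is a capacity ratio, HybriDIMM carries a quarter as much DRAM relative to NAND. The configuration labels below are hypothetical.

```python
# NAND-to-DRAM capacity ratios implied by the quoted HybriDIMM figures.
# Assumption: range endpoints pair up (256 GB with 8 GB, 512 GB with 16 GB),
# and 1 TB is taken as 1,024 GB.
HYBRIDIMM_CONFIGS = {
    "gen1_low": (256, 8),
    "gen1_high": (512, 16),
    "gen2": (1024, 32),
}

ratios = {name: nand_gb // dram_gb for name, (nand_gb, dram_gb) in HYBRIDIMM_CONFIGS.items()}
print(ratios)  # {'gen1_low': 32, 'gen1_high': 32, 'gen2': 32}
```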

Meanwhile, Viking Technology is collaborating with Sony to build NVDIMMs based on ReRAM, with Viking supplying the memory module know-how and Sony the ReRAM technology. (Viking already produces an NVDIMM product, with the tongue-twisting name of ArxCis-NV, but it employs NAND-backed DRAM for power-failure situations.) Sony, along with other ReRAM developers, maintains that resistive RAM offers better performance and endurance than NAND, although still not as good as DRAM. The Sony partnership was announced in August, but no date was offered for a product release.

A startup called Xitore, which came out of stealth mode in February, also uses a hybrid approach, with lots of non-volatile memory plus on-module DRAM for data caching. Even though it’s designed to plug into a DIMM slot, it’s a block-mode device that would be treated like an ultra-fast storage drive rather than as byte-addressable memory. Initial implementations could provide up to 4 TB per module, delivering 4 million IOPS with a latency of 2 microseconds. Supposedly it can employ either NAND or any post-NAND type of memory. It’s different enough that Xitore created its own architectural variant, called NVDIMM-X. A product roadmap has not been forthcoming.
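The IOPS and latency figures also imply how much parallelism the module must sustain. By Little’s law, the average number of requests in flight equals throughput times latency. Assuming the 4 million IOPS and 2-microsecond figures hold at the same time (Xitore has not said so), about eight requests must be outstanding at once; this is an inference, not a Xitore specification.

```python
# Little's law: average requests in flight = throughput x average latency.
def outstanding_requests(iops: float, latency_s: float) -> float:
    return iops * latency_s


in_flight = outstanding_requests(4_000_000, 2e-6)
print(f"~{in_flight:.0f} requests in flight")
```

A host issuing one request at a time would see far fewer IOPS; the headline number presumes a modest queue depth.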

The elephant in the room is Intel, which, along with its partner Micron, will offer NVDIMMs based on their much-talked-about 3D XPoint memory. Like ReRAM, 3D XPoint is said to offer better performance and endurance than NAND, although the original claims of 1,000 times the speed and endurance of NAND now appear to be in doubt. The product dates appear to be slipping as well: out to 2017 for the first Optane SSD product, and 2018 or even later for the first NVDIMMs. We’ll just have to wait and see.

Obviously, we are still early in the game, and the players and product sets could change drastically over the next few years. Overall, though, the prospects for broader uptake of this new class of device are promising. A report published last year by Transparency Market Research estimates that the NVDIMM market will grow from $3.3 million in 2014 to $1.3 billion by the end of 2021, nearly a 400-fold increase over that timeframe. Not all of that revenue will come from the datacenter, but the datacenter will be the largest segment for these products throughout the period studied.
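The growth multiple is easy to check, and it can also be expressed as a compound annual growth rate. The sketch treats 2014 through the end of 2021 as seven compounding years, which is an assumption about how the report’s endpoints line up.

```python
# Growth implied by the Transparency Market Research estimate quoted above.
start_usd = 3.3e6    # 2014
end_usd = 1.3e9      # end of 2021
years = 2021 - 2014  # assumed: seven compounding periods

growth = end_usd / start_usd
cagr = growth ** (1 / years) - 1
print(f"{growth:.0f}x overall, about {cagr:.0%} per year")
```

Under that assumption, the forecast amounts to the market more than doubling every year for seven years straight.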