Oct. 3, 2018

By: Michael Feldman

Xilinx has introduced Versal, a new product family based on its heterogeneous Adaptive Compute Acceleration Platform (ACAP). The initial product offerings integrate FPGA technology with Arm CPU cores, DSPs, and AI processing engines. The new platform aims to accelerate a wide array of machine learning and data-intensive workloads running in the datacenter and on edge devices.

Versal – a portmanteau of versatile and universal – represents the commercialization of Xilinx’s “Everest” architecture, an R&D effort that cost the company $1 billion over four years. At its most basic level, ACAP and the initial Versal implementation combine a number of domain-specific and general-purpose architectures, based on fixed-function hardware, with reconfigurable FPGA logic.

The approach is a response to processor performance stagnation caused by the death of Dennard scaling in the early 2000s and the more recent slowdown in the pace of Moore’s Law. Also, according to Xilinx CEO Victor Peng, the processor design cycle is simply too long to keep pace with today’s demand for new solutions. “It is exactly what the industry needs at the exact moment it needs it,” said Peng, who unveiled the Versal product family this week at the Xilinx Developer Forum in San Jose.

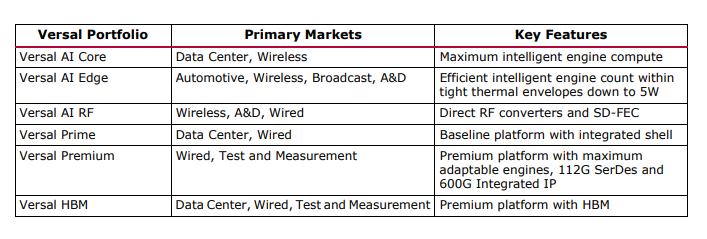

The new Versal offerings are divided into six product series, each of which is targeted to a particular application profile. The first two out of the chute will be the AI Core series, which is aimed at machine learning inference and advanced signal processing, and the Prime series, which is designed for inline acceleration for a variety of datacenter workloads. Future Versal series include AI Edge, AI RF, Premium, and HBM. The entire product set is summarized below.

Ultimately, the Versal products will be competing with similar FPGA products from Intel, but Xilinx is currently focused on positioning these devices as more performant alternatives to CPUs and GPUs, which currently dominate the application space being targeted by ACAP. In the datacenter, for example, the company is claiming that for image recognition inferencing, Versal products will run up to 43 times faster than an Intel Xeon Platinum CPU, two to eight times faster than an NVIDIA Tesla V100 GPU, and five times faster than a standalone FPGA. Likewise, for applications like financial risk analysis, genomics, and elastic search, Xilinx claims Versal can outrun conventional CPU implementations by factors of 89, 90, and 91, respectively.

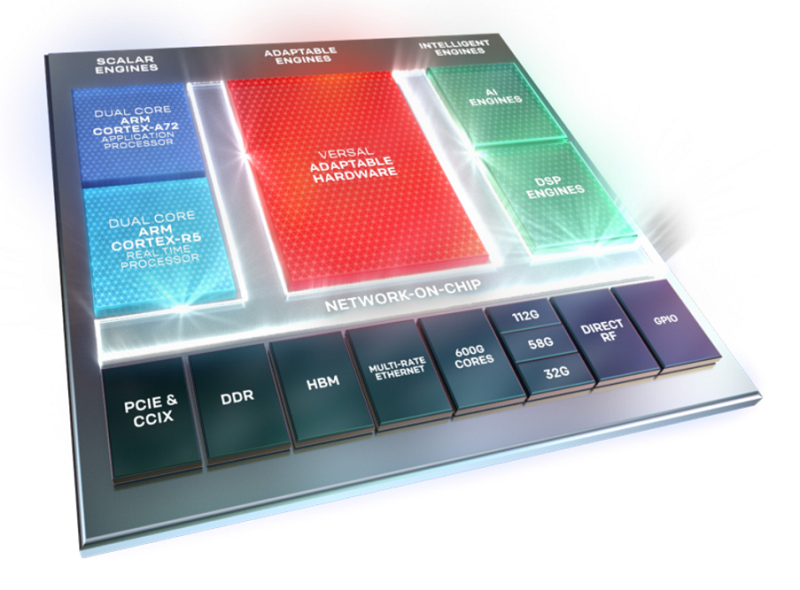

The AI Core series is primarily aimed at the rapidly expanding market for machine learning inference, which, according to a recent report by Barclays Research, will grow into a much larger market than machine learning training over the next decade. Products in this series consist of an FPGA, 128 to 400 AI Engines, a dual-core Arm Cortex-A72 application processor, a dual-core Arm Cortex-R5 real-time processor, 256 KB of embedded memory, and more than 1,900 DSP floating point engines.

The FPGA component, which forms the platform’s Adaptable Engine, incorporates up to 1.9 million system logic cells and nearly 900,000 LUTs. The memory subsystem is made up of more than 130 Mb of UltraRAM, up to 34 Mb of block RAM, 28 Mb of distributed RAM, and 32 Mb of Accelerator RAM blocks, which can be accessed from any processor engine on the platform. The series also sports PCIe Gen4 x8 and x16 interfaces, CCIX host interfaces, 32G SerDes, and two to four integrated DDR4 memory controllers. All of these components are glued together with a multi-terabit/sec network-on-chip (NoC).
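To put those on-chip memory figures in more familiar units, the quoted capacities can be summed and converted from megabits to megabytes. The snippet below is only a rough sketch: it treats "more than 130 Mb" and "up to 34 Mb" as exact values, which is an assumption, and the dictionary and function names are illustrative rather than anything from Xilinx.

```python
# On-chip memory figures quoted in the article, in megabits (Mb).
# Assumption: "more than 130 Mb" and "up to 34 Mb" are treated as exact
# values, so the total is a ballpark figure for the largest device.
ON_CHIP_MEMORY_MBIT = {
    "UltraRAM": 130,
    "block RAM": 34,
    "distributed RAM": 28,
    "Accelerator RAM": 32,
}


def megabits_to_megabytes(mbit):
    """Convert megabits to megabytes (8 bits per byte)."""
    return mbit / 8


total_mbit = sum(ON_CHIP_MEMORY_MBIT.values())
total_mbyte = megabits_to_megabytes(total_mbit)

print(f"Approximate on-chip memory: {total_mbit} Mb = {total_mbyte:.0f} MB")
```

Under these assumptions, the total comes to roughly 224 Mb, or about 28 MB, of on-chip memory.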

There are five products in the AI Core series, with the top-of-the-line offering providing 147 peak teraops (TOPs) of INT8 performance for inferencing, most of which is provided by the AI vector processing engines. For the sake of comparison, NVIDIA’s highest performing GPU for inferencing, the new Tesla T4, delivers 130 INT8 TOPs.
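The gap between those two peak figures is easy to quantify. The following sketch simply divides one quoted number by the other; the constant and function names are illustrative, and since both values are vendor-stated peaks, the ratio says nothing about delivered performance on real workloads.

```python
# Peak INT8 throughput figures quoted in the article, in tera-operations
# per second. Both are vendor-stated peak numbers, not measured results.
VERSAL_AI_CORE_PEAK_TOPS = 147
TESLA_T4_PEAK_TOPS = 130


def peak_ratio(a_tops, b_tops):
    """Return the ratio of two peak-throughput figures."""
    return a_tops / b_tops


ratio = peak_ratio(VERSAL_AI_CORE_PEAK_TOPS, TESLA_T4_PEAK_TOPS)
percent_higher = (ratio - 1) * 100

print(f"Versal vs. T4 peak INT8: {ratio:.2f}x ({percent_higher:.0f}% higher)")
```

On paper, that gives the top AI Core part an advantage of about 13 percent over the T4.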

The Versal Prime series represents a more general-purpose set of offerings and includes a somewhat larger FPGA (up to 2.1 million system logic cells and 984,000 LUTs) compared to the AI Core products. It also comes with the same basic Arm processor setup, a good deal more memory capacity, and up to 3,080 DSP engines, but no AI Engine componentry. These products are suitable for applications like medical imaging, network and storage acceleration, communication test equipment, and avionics control.

As you might imagine, programming these complex devices is going to take some doing. To help that along, Xilinx is providing a development environment with the relevant language support (C, C++, and Python), drivers, middleware, libraries and application-specific framework support. The company is promising to release more specifics on the software stack next year.

The Versal AI Core and Prime products are scheduled to be generally available in the second half of 2019, although Xilinx has already engaged “multiple key customers” through an early access program. If any of this piques your interest, the company has published ample documentation on the new product family, which can be found here.