July 26, 2016

By: Michael Feldman

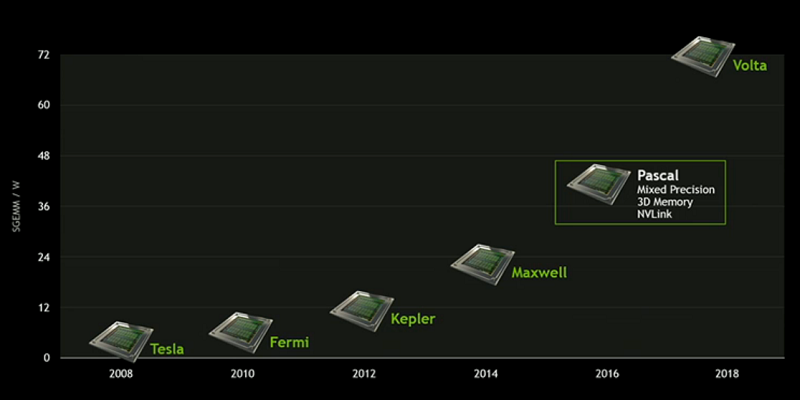

The GPU rumor mill was grinding away this week with talk of an accelerated launch for NVIDIA’s next-generation Volta processor. Volta is the architecture that will succeed the current-generation Pascal design, which is the basis for the Tesla P100 GPUs destined for the HPC and deep learning markets. According to a report in Fudzilla, the first Volta parts may show up in 2017, a year ahead of NVIDIA’s original schedule.

If true, that would represent an unprecedented acceleration of NVIDIA’s normal two-year cadence for introducing new GPU architectures. The first Pascal parts, under the P100 moniker, were introduced this past May at NVIDIA’s GPU Technology Conference (GTC), and they have yet to become generally available. NVIDIA says the PCIe version of the P100 will be in production by the fourth quarter of 2016 and the NVLink-enabled version will be generally available at the beginning of 2017.

Fudzilla’s report of an accelerated Volta launch is based on an unnamed source, who indicated the new platform will be manufactured on the same 16nm FinFET technology currently used for Pascal. The source also said that “the performance per watt is expected to increase tremendously.”

A Volta GPU in 2017 has a few things going against it. For starters, it would shorten the lives of the products based on the Pascal platform, which, according to NVIDIA CEO Jen-Hsun Huang, cost the company $3 billion to develop. The shortened timeframe would also constrain the number of new features that could be included in Volta (although NVIDIA hasn’t revealed much about the architecture’s bells and whistles yet). Finally, significantly increasing performance per watt on the same 16nm manufacturing technology would take some doing: tough, but certainly not impossible.

On the other hand, a 2017 introduction of Volta makes a good deal of sense. One area in particular where the new timeline would match up is the schedule for the US Department of Energy’s 100-plus-petaflop Summit and Sierra supercomputers. Both systems will use IBM Power9 CPUs accelerated by Volta GPUs, and although they are not scheduled to go into production until 2018, installation is set to start in 2017.

NVIDIA has plenty of other motivations to accelerate the roll-out of its next architecture. First, in the HPC and deep learning space, Intel is providing new competition with its recently launched “Knights Landing” Xeon Phi processors. Getting a new and improved platform into the market as soon as possible could help NVIDIA keep ahead of Intel in these two coveted markets. NVIDIA seems particularly keen on keeping its dominance in deep learning intact, and could add features and performance improvements geared for this application set.

Competition from AMD, with its upcoming Vega GPU, could also spur NVIDIA to push Volta out more quickly. Vega is AMD’s new high-end GPU architecture destined for the company’s FirePro and Radeon lines, and will use 14nm FinFET and HBM2 memory to crank up performance and energy efficiency. Although AMD has not provided much competition for NVIDIA in HPC and deep learning, AMD’s market share in the consumer/gaming space has been on the rise lately.

The bottom line is that NVIDIA’s preeminent position in HPC, deep learning and the consumer graphics space will be challenged on a couple of fronts over the next year. Whether or not NVIDIA uses a faster cadence to try to maintain its dominance will probably become apparent at next spring’s GTC event.