Jan. 25, 2018

By: Michael Feldman

The Department of Energy (DOE) is soliciting proposals for research projects that will receive early access to Aurora, the first exascale supercomputer to be deployed in the US. There’s one catch though: the DOE is not telling anyone about machine’s architecture.

Aurora, you will remember, was originally going to be a 180-petaflop Intel-Cray system powered by “Knights Hill” Xeon Phi processors. It was scheduled to be deployed at Argonne National Lab this year. But in October of 2017, the DOE rewrote the contract, increasing Aurora’s performance to over one exaflop and moving its installation date out to 2021. A month after that revelation, Intel offhandedly mentioned that they were dumping the Knights Hill product, without providing any assurances for a replacement processor in the Xeon Phi line.

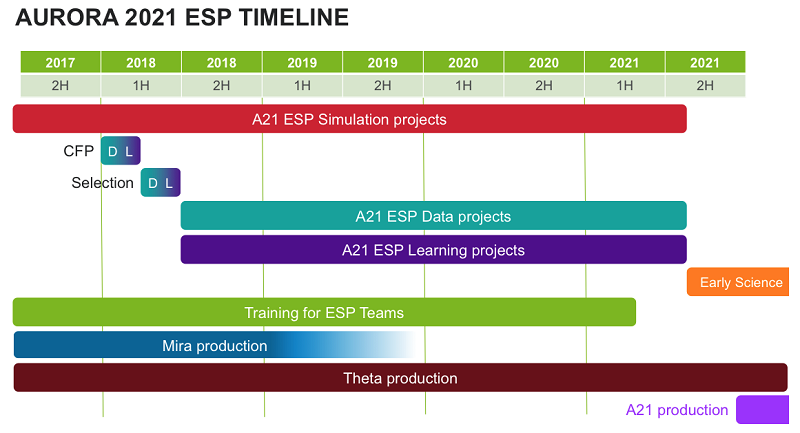

Which brings us to this month’s news of the DOE solicitation. In a nutshell, the agency is asking users to submit project proposals for the country’s first exascale system, what they’re calling the Aurora Early Science Program Data and Learning. Basically, they’re looking for “big data”-type applications that focus on data analytics or some aspect of machine learning as it applies to scientific research. The DOE is particularly interested in applications that encompass “the convergence of simulation, data and learning.” The 10 awardees will get access to Aurora for about three months, from the time between system acceptance in the second half of 2021 and general availability for the wider research community in 2022.

Here’s the thing though: the DOE is saying very little about the design of the system – to either the general public or the proposal submitters. The reason they give is that Aurora’s architecture is protected by a Restricted Secret Nondisclosure Agreement (which we assume is the double-dog dare version of NDAs). “We realize that that this poses a substantial challenge to proposal authors, especially in the areas of Data and Learning, where there is limited history of applications running at leadership scale,” says the solicitation; “and we will take this into account when evaluating proposals.”

This level of concealment is certainly out of the ordinary. For most publicly-funded supercomputers of this size and importance, the basic hardware details are known well in advance. That was certainly the case for the three pre-exascale systems under the DOE’s CORAL program. When those systems were awarded in 2014 and 2015, three years before their scheduled installation dates, the basic processor and interconnect hardware, as well as the node configuration, were known at the time.

One can assume the rationale for all this secrecy is that Intel (and/or Cray) wants to keep its high-performance hardware plans something of a mystery for competitive reasons. Intel, in particular, is locked in a battle with NVIDIA, Mellanox, IBM, ARM vendors, and others on a number of different fronts in the high-performance arena – processors, accelerators, interconnects, memory componentry – and probably believes the less its rivals know about its roadmap, the better.

The solicitation did, however, offer a few tidbits that revealed the general nature of the hardware. Specifically, the text noted that Aurora is “expected to have over 50,000 nodes and over 5 petabytes of total memory, including high bandwidth memory.” That means each node would have to deliver about 20 teraflops to hit that one exaflop mark.

That’s actually not very difficult to achieve, even with current hardware. For example, the Summit supercomputer, which is currently under construction, is outfitted with six NVIDIA V100 GPUs in its Power9 servers, and will achieve more than 40 teraflops per node. (In fact, you could build a Summit-style exascale supercomputer today with half as many nodes as Aurora, although it would probably cost around $1 billion dollars and chew up close to $75 million per year in electricity.) A 20-teraflop node could be powered by say two 10-teraflop Xeon Phi processors or something similar. The real challenge here is to keep the power draw to something in the neighborhood of 400 to 600 watts per node, so you don’t blow past the nominal 20 to 30 MW limit envisioned for the first exascale supercomputers.

The fact that Aurora has a relatively high node count suggests that Intel is confident enough about its next iteration of its Omni-Path interconnect fabric, or whatever replaces it on the company’s secret roadmap, to glue together something of that scale. Almost nothing is known about the second generation of the original Omni-Path technology, but it’s a good bet it will be quite different from its predecessor. Another technology that could make a 50,000-node supercomputer more practical is the integration of Intel’s silicon photonics. That technology is likely to be somewhat more mature in the 2021 timeframe, given that the company’s first-generation products were launched in 2016.

Also, the fact that the DOE is looking for projects emphasizing data analytics and machine learning implies that the Aurora hardware will be particularly optimized for such applications. That suggests that Nervana AI circuitry may find its way into the machine, either integrated into the main processor, or as a standalone accelerator. At the very least, there will be extensive support for reduced-precision arithmetic (32/16/8-bit) in Aurora’s processors, although that’s already the case in both the Xeon and Xeon Phi lines.

Also of interest in this regard is Intel’s 3D XPoint memory, a technology that provides non-volatile storage at something approaching DRAM speed. With just 5 petabytes of main memory to serve one exaflops worth of processors (a flops-per-bytes ration of 200!), there’s a chance Aurora could employ this technology in NVDIMMs or SSDs as a low-latency storage tier adjacent to the system’s DRAM. That could provide these data-intensive applications with access to much more expansive memory, albeit at somewhat slower transfer speeds than main memory. Intel could also employ 3D XPoint in the processor’s’ high-bandwidth memory (HBM) modules, combining the high capacity and non-volatility of 3D XPoint with the superior bandwidth inherent in HBM designs.

At least for the 10 project winners, the hardware guessing game will end in June. That’s when the DOE is scheduled to select the Early Science Program awardees. At that point, the project teams will be able to learn about the Aurora architecture after signing the appropriate RSNDA agreements with Intel and Cray, which should give the developers plenty of time for training and figuring out how to tweak their software for Aurora before it arrives in 2021.

Neither the DOE nor the vendors have said when they will release the architectural details for Aurora to the general public.