Dec. 5, 2017

By: Michael Feldman

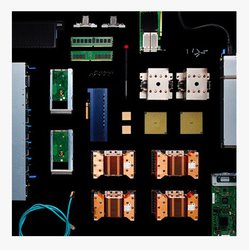

IBM today unveiled its first Power9-based server, the AC922, which the company is promoting as a platform for AI workload acceleration. The new dual-socket server was announced in conjunction with the official launch of the Power9 processor.

The details of the chip itself have been known for well over a year. (We reviewed the processor back in August 2016.) To summarize: the Power9 is built on 14nm FinFET technology and is comprised of more than 8-billion transistors. It’s equipped with 12 to 24 cores, although the initial versions used in AC922 are only available in configurations of 16, 18, 20 and 22 cores.

The details of the chip itself have been known for well over a year. (We reviewed the processor back in August 2016.) To summarize: the Power9 is built on 14nm FinFET technology and is comprised of more than 8-billion transistors. It’s equipped with 12 to 24 cores, although the initial versions used in AC922 are only available in configurations of 16, 18, 20 and 22 cores.

Thanks to the Power9’s four-way simultaneous multi-threading (SMT) capability, these chips offers about twice the number of threads as their x86 competition. For purely compute-bound work, the SMT capability is of little use, but for applications where threads end up waiting for memory or I/O a lot, it can significantly increase throughput.

The IBM announcement didn’t talk much about the memory subsystem in the AC922, but we can assume that it’s based on the scale-out version of the Power9 processor, which is the one for single- and dual-socket nodes. This version supports direct attached memory via eight DDR4 ports, with each socket providing up to 120 GB/sec of sustained bandwidth. Power processors have historically been well-endowed with memory bandwidth, and this one is no exception.

Probably the Power9’s biggest standout feature is its on-chip support for multiple device interconnect protocols. These include PCIe gen4, CAPI 2.0, OpenCAPI, and NVLink 2.0. The rationale here is to make the platform broadly accessible to accelerators, while providing the fastest connectivity speeds possible.

The second-generation NVLink support exists solely to maximize connectivity to NVIDIA’s V100 GPUs, which according to IBM can provide a CPU-to-GPU data transfer rate of up to 150 GB/sec. That’s nearly 10 times faster than what is possible on a generic server, where the GPU connected to a host processor using 16 lanes of PCIe gen3. The ultra-speedy NVLink will enable the V100 to share the server’s more capacious system memory, making it possible for the GPU to access larger datasets more transparently, avoiding the overhead of copying data into the graphic card’s local memory. It’s the kind of differentiation that can get lost in the technical weeds, but can have a profound impact on application performance.

For a more standard device interconnect, there are also hooks for PCIe gen4, which provides twice the data throughput of gen3. Although it’s not nearly as fast as NVLink, gen4 has the advantage of compatibility with a much larger ecosystem. Currently, it’s not supported on very many devices, but two where it is already present could be immediately useful for prospective AC922 customers. Mellanox’s new 200Gbps HDR InfiniBand adapters (ConnectX-6) and Xilinx Ultrascale FPGAs will both come with built-in PCIe gen4 connectivity, so will be able to take full advantage of the faster lanes available on the Power9.

The practical utility of CAPI and OpenCAPI remains to be seen, although it’s worth noting that Mellanox, Google, AMD, NVIDIA, Xilinx, Western Digital and Micron are all board-level members of the OpenCAPI consortium, so something interesting might be in the works.

With such a feature set, it’s no surprise that the Power9 will soon be powering two of the most powerful supercomputers in the world: the US Department of Energy’s Summit and Sierra supercomputers. In these systems, the new chip will act as the host processor for the NVIDIA V100 Tesla GPU, which will supply the majority of flops for these machines. Summit will be a 200-plus petaflop system installed at Oak Ridge National Laboratory, and is expected to be the fastest machine on the planet when it’s completed next year. Its smaller sibling, Sierra, will top out at about 125 petaflops and will be deployed at Lawrence Livermore National Laboratory. Both systems are currently under construction and are expected to become operational in the first half of 2018.

With such a feature set, it’s no surprise that the Power9 will soon be powering two of the most powerful supercomputers in the world: the US Department of Energy’s Summit and Sierra supercomputers. In these systems, the new chip will act as the host processor for the NVIDIA V100 Tesla GPU, which will supply the majority of flops for these machines. Summit will be a 200-plus petaflop system installed at Oak Ridge National Laboratory, and is expected to be the fastest machine on the planet when it’s completed next year. Its smaller sibling, Sierra, will top out at about 125 petaflops and will be deployed at Lawrence Livermore National Laboratory. Both systems are currently under construction and are expected to become operational in the first half of 2018.

Apparently, the DOE supers will use the same AC922 servers that IBM will be selling to enterprises, which is interesting inasmuch as for the previous Power8 systems, IBM offered a specific set of platforms for the HPC crowd, namely, the S812LC, S822L, S812L, and S824L. The customer overlap for the AC922 could be attributed to how AI and data analytics workloads in the enterprise are now demanding pretty much the same sort of high performance componentry as standard HPC applications.

Generally speaking, the AC922 is suited to data-intensive and compute-intensive applications of all sorts, especially those that can be accelerated with coprocessor silicon. IBM points to Kinetica, a GPU-accelerated database management system, as an example of a more traditional commercial application that is a good match for the AC922. Along those same lines, the platform should be equally adept at things like real-time fraud detection, credit risk analysis, or a host of other HPC applications where data analytics is the dominant computation.

That said, IBM’s main focus here is AI, specifically the kind of deep learning applications that can be accelerated with NVIDIA latest GPUs. The server can house up to six of the V100 devices, which provides 750 teraflops of (16/32-bit) deep learning performance per enclosure. To better extract those flops, IBM is touting its PowerAI package, a suite of deep learning frameworks targeted to enterprise users with Power-based servers accelerated by NVLinked graphics chips. It provides optimized versions of popular frameworks like TensorFlow, Caffe, and Chainer.

Although it offers a unique feature set, the AC922 faces entrenched competition from other vendors offering x86 gear (with or without GPU acceleration) aimed at this same market space. Besides the high-profile Summit and Sierra deployments, IBM hasn’t revealed any other early customers for this platform. And while the Power9/V100 platform offers more performance and flexibility than the previous-generation Power8/P100 servers, it remains to be seen if customers are willing pay for it. We’ll soon find out.