Aug. 30, 2018

By: Dairsie Latimer, Technical Advisor, Red Oak Consulting

With a share price riding high and dominance in the datacentre market, it may seem perverse to state that Intel is a company facing a range of significant problems. So what caused the technology behemoth on the occasion of its 50th birthday to find itself so spectacularly on its back foot?

Andy Grove’s famous maxim, “Success breeds complacency. Complacency breeds failure. Only the paranoid survive.” has proven accurate once again. Intel again finds itself at a classic Grove strategic inflection point. The problem is, it doesn’t look like they quite know how to manage it.

Tick-Tock

What is most surprising for a long-term observer is that Intel has let slip what was seen as a perpetual two-year (full node) lead in process technology over its foundry competitors. The loss of process leadership – let’s call it the 10 nm fumble – is doubly strange, as the recently departed CEO started out at Intel as a process engineer. Surely a core tenet would have been to ensure that the golden goose kept on laying?

Did Intel become complacent or did it just fumble the ball? It’s been clear for years that process transitions were getting increasingly challenging. Even five years ago, the cadence of process transition that drives Moore’s Law was visibly and rapidly slowing. Since Dennard scaling fell by the wayside in the mid-00’s, engineers have had to pull an increasing number of rabbits from their hats to keep Moore’s Law on track.

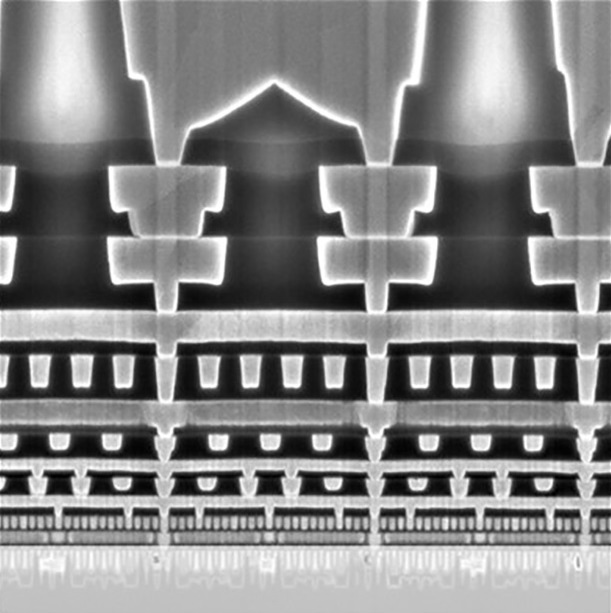

When you are fighting physics, a huge number of disciplines and developments have to align to make it work at all. Revolutionary, rather than just evolutionary, changes are increasingly required to make the next process nodes viable. In the last decade alone, major innovations have included immersion lithography, FinFETs and multi-patterning. In the next few years we will see the widespread adoption of extreme ultraviolet (EUV) lithography, more exotic materials and nanowires with gate-all-around structures for transistors. Worryingly, all these changes will make the job of characterising and quality assuring the products an increasingly difficult and expensive exercise.

Fast forward to 2014, when the period between the general availability of consumer parts (smaller and thus more likely to yield on new process nodes) and Xeons (larger, more complex, closer to the edge of the yield curve) on the same lithographic node was opening fast. The 22 nm to 14 nm transition had been far from smooth and most observers felt that the 10 nm transition would be at least as challenging.

In the HPC market, the general availability of Broadwell Xeons, promised in 2013, and Skylake Xeons, promised in 2015, was delayed. The ‘Tick-Tock’ strategy, first adopted in 2007, had been showing signs of stress for several years, and its public abandonment in 2016 portended significant changes.

Post Prescott (Netburst architecture on 90 nm), and indeed prior to that, Intel had been almost metronomic in its execution of process transitions. In fact, much of the recent success of the company, from the relative doldrums of the mid to late 00’s, had been predicated on this continued process and manufacturing superiority. Being, in effect, a full process node ahead of your competitors with industry leading yields gave Intel the whip hand when it came to processor design, cost optimisation and gross profit margins. Of course this was helped significantly by AMD’s own fumbling execution with Barcelona and Bulldozer Opterons.

With Tick-Tock, Intel would introduce a new lithographic process node in one product cycle (the ‘tick’) and then an upgraded microarchitecture in the next product cycle (the ‘tock’). Its replacement is a less aggressive three-element cycle, to be known as “Process-Architecture-Optimization”, and a tacit admission that the Moore’s Law cycle was lengthening.
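The difference between the two cadences can be sketched in a few lines of Python. This is only an illustration: it assumes every product cycle is the same length, and the names `TICK_TOCK`, `PAO` and `new_nodes` are made up for the example rather than taken from Intel.

```python
from itertools import cycle, islice

# Each tuple is one full turn of the cadence; "process" marks a new node.
TICK_TOCK = ("process", "architecture")
PAO = ("process", "architecture", "optimization")

def new_nodes(cadence, product_cycles):
    """Count how many of the given product cycles introduce a new process node."""
    steps = islice(cycle(cadence), product_cycles)
    return sum(1 for step in steps if step == "process")

# Over the same number of product cycles, the three-element cadence
# delivers fewer process transitions than Tick-Tock.
tick_tock_nodes = new_nodes(TICK_TOCK, 6)
pao_nodes = new_nodes(PAO, 6)
```

Under that equal-length assumption, six product cycles bring three new nodes under Tick-Tock but only two under Process-Architecture-Optimization, which is the lengthening the new model quietly concedes.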

With the benefit of two years’ hindsight, there have been two optimisation steps and significant improvements at 14 nm, the so-called 14 nm+ and 14 nm++ nodes, and a third on the roadmap trying to bridge the gap to the AWOL 10 nm process. Because high performance computing rides the coat-tails of consumer development, Intel’s HPC roadmap is being pushed out further to the right. It also means that far from having consumer developments drive process and manufacturing innovation, HPC will now find itself pushed even further towards the haemorrhaging edge of technology.

Off the flame-grill and into the fire

If the 00’s were characterised by relentless process transitions, the early 10’s clearly signalled the beginning of the end for traditional CMOS scaling, with Moore’s Law moving from the waiting room to admission into a high dependency unit.

Make no mistake; a missing or late process node can leave chunks of your business model in tatters. Every Intel product whose starting assumption was that it would be fabricated at 10 nm was immediately at risk. Perhaps this is where the complacency set in: when you already have high-yielding products at 14 nm that have no direct market competition, such as Skylake, it simply does not make much economic sense to release a 10 nm product before its yields cross over and deliver more transistors per dollar.
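The crossover argument can be made concrete with a back-of-the-envelope sketch. Every figure below is invented purely for illustration (wafer costs, die counts, transistor counts and yields are not Intel data), and the function names are hypothetical; the point is only how yield, wafer cost and density combine into cost per good transistor.

```python
def cost_per_good_transistor(wafer_cost, dies_per_wafer, transistors_per_die, yield_fraction):
    """Wafer cost spread over the transistors on dies that actually work."""
    good_dies = dies_per_wafer * yield_fraction
    return wafer_cost / (good_dies * transistors_per_die)

def break_even_yield(target_cost, wafer_cost, dies_per_wafer, transistors_per_die):
    """Yield a new node needs to match a target cost per good transistor."""
    return wafer_cost / (dies_per_wafer * transistors_per_die * target_cost)

# Hypothetical mature node: cheaper wafers, high yield.
old_cost = cost_per_good_transistor(
    wafer_cost=6000, dies_per_wafer=100, transistors_per_die=5e9, yield_fraction=0.8
)

# Hypothetical new node: pricier wafers, same die size, twice the transistors per die.
needed = break_even_yield(
    target_cost=old_cost, wafer_cost=9000, dies_per_wafer=100, transistors_per_die=1e10
)
print(f"new node breaks even at a yield of about {needed:.0%}")
```

With these made-up inputs the new node has to yield roughly 60% before it matches the old node on cost per transistor; below that, shipping on the mature process is the cheaper option even though the new one is denser.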

Intel hasn’t publicly disclosed all the new tricks it was planning to use for its 10 nm process, so it’s hard to do more than speculate about which are causing the most yield issues. The likelihood is that there is a range of new developments, including the use of new materials for contacts and metal layers, self-aligned quad patterning used by Intel to resolve finer feature pitches, and a whole host of other sub-threshold production and reliability issues, all of which are conspiring to make 10 nm not just relatively expensive in the best case, but also to make current yields simply unpalatable for Intel.

The investment already made in 10 nm will be huge, likely double-digit billions by the time you factor in everything from R&D to retrofitting fabs. Intel is stuck with trying to make it work, even imperfectly, as witnessed by its most recent public plan of record. Any significant changes to the 10 nm process will come with commensurate delays, additional costs and production problems, which mean the characterisation and quality assurance for 10 nm parts will have to start again.

This explains the new timescales of late 2019 for any volume availability of consumer parts on 10 nm, and that’s assuming they have all the major fixes to the process already locked in. In its current form, even this may prove to be optimistic. My personal prediction is not to expect the 10 nm Ice Lake-SP Xeons until Q3 2020, and even that’s subject to the usual caveats around Intel roadmap rejigs. In fact, we may even have to wait for an optimisation phase at 10 nm (a 10 nm+) before an Ice Lake-SP appears.

Also, if Intel has found 10 nm challenging to deliver with its chosen technology stack, then what odds that its 7 nm process, as well as everyone else’s sub-7 nm, will have a similarly difficult gestation?

EUV has finally been locked into most foundry roadmaps at 7 nm – and indeed Intel is a large investor in the technology – so given the apparent issues with self-aligned quad patterning at 10 nm, Intel at 7 nm will be using EUV and trading off wafer starts per hour against fewer process steps and lower defect densities like everyone else. But how many of the other technological developments on its roadmap have failed to materialise at 10 nm?

Other foundries appear to have been more conservative in their process roadmaps, choosing a process of continuous refinement rather than what appears to be a sort of big bang approach intended to deliver a decisive scaling advantage over the competition. Worryingly for Intel, fixing its 10 nm process may also draw resources away from its 7 nm development efforts, so there will probably be another sting in the tail of the 10 nm fumble.

All this delivers another blow to Intel’s attempt to diversify and leverage its process advantage, by spinning up a foundry business. In addition, a delayed or missing process node guarantees an erosion of profit margin since you can’t be as competitive on cost or transistor density, which Moore’s Law now uses as a proxy for performance. To all intents and purposes, Intel has stalled on the Moore’s Law curve – duly flattened and extended and explained as hyper-scaling – and its foundry competitors have more or less caught up or perhaps even just nudged ahead of Intel. This comes just at the moment when Intel’s CPU families really need a shot of 10 nm process vitamins to stave off a resurgent AMD and the anticipated entry of cost-optimised ARM-based CPUs into the datacentre market.

Would you like chips with that?

The costs associated with low geometry semiconductor manufacturing are rapidly escalating and while the issues with 10 nm will hurt Intel, they will also accelerate the need for new approaches to the continued evolution of processors. Intel has already signalled some of these changes with its investments in Embedded Multi-die Interconnect Bridge (EMIB) packaging, silicon photonics, and a renewed focus on tools to help engineer for ever better software efficiency (read multicore scaling).

Post 7 nm is where the crystal ball gets decidedly cloudy in terms of continued silicon scaling. While there will continue to be new tricks to play for 2D semiconductor devices, such as nanowires and gate-all-around architectures for transistors, even with EUV widely used, there does appear to be a scaling wall.

Monolithic 3D silicon fabrication for logic, and the integration of logic and memory in monolithic 3D semiconductor production, similar in some ways to the advent of 3D NAND, is clearly one possible approach to extending the post-Moore’s Law runway. New ways of die stacking, packaging and cooling will also deliver greater effective density and therefore performance, and we will see this first in HPC and associated disciplines. Inevitably, package and test for these denser solutions will start to dominate the cost of production, but not necessarily design, and before they become economically viable, new EDA, test and inspection tools will certainly have to be developed and be widely available.

Predictably, all of this will have a significant impact on the HPC community so buckle up, gang; it’s going to get interesting!

About the author

Dairsie has a somewhat eclectic background, having worked in a variety of roles on supplier side and client side across the commercial and public sectors as a consultant and software engineer. Following an early career in computer graphics, micro-architecture design and full stack software development, he has over twelve years of experience in the HPC sector, ranging from developing low-level libraries and software for novel computing architectures to porting complex HPC applications to a range of accelerators. Dairsie joined Red Oak Consulting @redoakHPC in 2010, bringing his wealth of experience to both the business and its customers.