Feb. 23, 2018

By: Michael Feldman

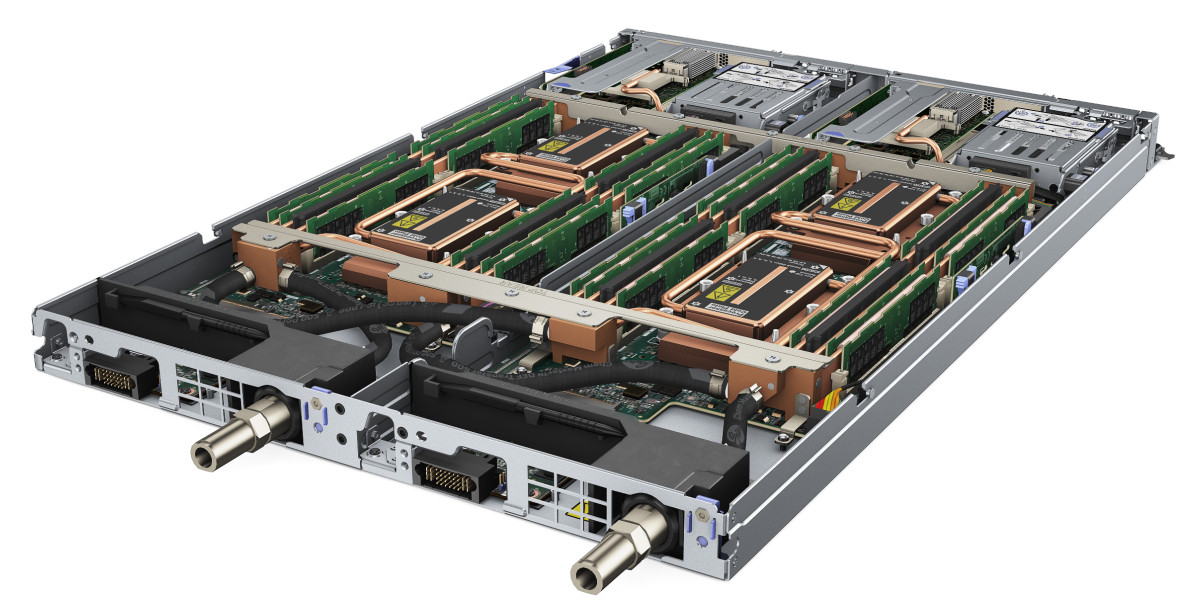

Lenovo has unveiled the ThinkSystem SD650, a densely constructed, direct-water-cooled server aimed at the HPC market. Its first big test will come later this year when it's deployed in Germany's most powerful supercomputer, the SuperMUC-NG.

The SD650 is the latest in the ThinkSystem product line, which was launched last summer in conjunction with the release of Intel’s “Skylake” Xeon Scalable Processor (Xeon SP). The portfolio spans rack and blade designs for various markets, but with the SD650, Lenovo has built a box that is purpose-built for HPC, especially for environments where cooling costs and energy consumption are paramount.

The server can be outfitted with either Platinum or Gold Xeon SP processors, and since it's a dual-socket design, it can be configured with up to 56 cores per server if you opt for the big 28-core chips. At least for the time being, GPUs are not supported. But thanks to the use of high-end Xeon chips, for many workloads they will barely be missed. Using the fastest Platinum chip, the Xeon Platinum 8180, an SD650 server can deliver 4 teraflops of peak performance, a level that until a year or so ago was only possible with graphics coprocessors, Xeon Phi processors, or some other sort of accelerator.
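The 4-teraflop figure follows from the usual peak-performance formula: sockets × cores × clock × floating-point operations per cycle. The sketch below assumes a 2.5 GHz nominal clock for the Xeon Platinum 8180 and 32 double-precision operations per core per cycle (two AVX-512 FMA units); neither number comes from the article, and real AVX-512 workloads typically run below the nominal clock, so treat the result as a theoretical ceiling.

```python
# Back-of-the-envelope peak FP64 throughput for a dual-socket SD650 node.
# Assumptions (not from the article): 2.5 GHz nominal clock for the
# Xeon Platinum 8180, and 32 double-precision FLOPs per core per cycle
# (two AVX-512 FMA units x 8 doubles x 2 operations per FMA).

def peak_tflops(sockets: int, cores_per_socket: int,
                clock_ghz: float, flops_per_cycle: int) -> float:
    """Return theoretical peak performance in teraflops."""
    gflops = sockets * cores_per_socket * clock_ghz * flops_per_cycle
    return gflops / 1000.0

node_peak = peak_tflops(sockets=2, cores_per_socket=28,
                        clock_ghz=2.5, flops_per_cycle=32)
print(f"Peak per SD650 node: {node_peak:.2f} TFLOPS")  # 4.48
```

Under these assumptions the node lands at roughly 4.5 teraflops, consistent with the article's round figure of 4.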

Network adapter support extends to both InfiniBand and Omni-Path, with Ethernet ports relegated to management functions. Memory on the SD650 maxes out at 768 GB, spread across 12 DIMM slots, 6 per socket. It also includes four additional DIMM slots for 3D XPoint non-volatile memory, which is slated to be supported on future processors. Internal storage comes in the form of SSDs: either two SATA drives or one NVMe drive per server.

Two servers take up just 1U of space, and 12 can be squeezed into Lenovo’s 6U n1200 chassis. Packing that much computational and storage density into such a compact space generates a lot of heat, which brings us to one of the SD650’s bigger selling points and its real claim to fame: its warm water cooling system. In this case, water provides direct cooling to the CPUs, memory modules, and storage drives, which happen to be the components that generate most of the heat. In the SD650 design, inlet temperatures can be as high as 45°C, and in some cases 50°C, which means little if any chilled water is needed to keep things running smoothly.
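To put that density in numbers, here is a rough per-chassis and per-rack tally. The two-per-1U and 12-per-6U figures are from the article; the 42U rack filled entirely with n1200 chassis and the ~4.48 teraflops per server (two 28-core Xeon Platinum 8180 chips at an assumed 2.5 GHz) are hypothetical inputs for illustration. A real rack would also need room for switches and power distribution.

```python
# Rough compute density for SD650 deployments.
# From the article: 2 servers per 1U, 12 servers per 6U n1200 chassis.
# Hypothetical assumptions: a 42U rack holding only n1200 chassis, and
# ~4.48 TFLOPS peak per server (two 28-core Xeon Platinum 8180 chips).

SERVERS_PER_CHASSIS = 12
CHASSIS_HEIGHT_U = 6
CORES_PER_SERVER = 56
PEAK_TFLOPS_PER_SERVER = 4.48   # assumption, not a Lenovo figure
RACK_HEIGHT_U = 42              # hypothetical rack size

chassis_peak_tflops = SERVERS_PER_CHASSIS * PEAK_TFLOPS_PER_SERVER  # ~53.8
chassis_per_rack = RACK_HEIGHT_U // CHASSIS_HEIGHT_U                # 7
servers_per_rack = chassis_per_rack * SERVERS_PER_CHASSIS           # 84
cores_per_rack = servers_per_rack * CORES_PER_SERVER                # 4704
rack_peak_tflops = servers_per_rack * PEAK_TFLOPS_PER_SERVER        # ~376

print(f"per chassis: {chassis_peak_tflops:.1f} TFLOPS")
print(f"per rack: {servers_per_rack} servers, {cores_per_rack} cores, "
      f"{rack_peak_tflops:.1f} TFLOPS")
```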

Thanks to the direct water cooling, Lenovo says it is able to reduce power consumption by 30 to 40 percent compared to traditional air cooling, and because water is a much better conductor of heat than air, the system components can operate at lower temperatures, up to 20°C lower by Lenovo’s estimate. Plus, the heated outlet water can be piped away to warm other parts of the facility.
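A related reason water makes such an effective coolant is its volumetric heat capacity. The sensible-heat balance Q = ṁ·c_p·ΔT gives the flow needed to carry away a given load; the sketch below compares water and air for a hypothetical 1 kW load with a 10 K inlet-to-outlet rise, using textbook property values near room temperature (water is slightly less dense at 45-50°C, which does not change the picture).

```python
# Volume of coolant needed to carry away a heat load.
# Sensible-heat balance: Q = m_dot * c_p * dT  =>  m_dot = Q / (c_p * dT).
# Property values are textbook approximations near room temperature;
# the 1 kW load and 10 K rise are hypothetical.

WATER_CP = 4186.0       # J/(kg*K)
WATER_DENSITY = 1000.0  # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3

def volumetric_flow_m3s(heat_w, cp, density, delta_t_k):
    """Volume flow (m^3/s) that absorbs heat_w watts with a delta_t_k rise."""
    mass_flow = heat_w / (cp * delta_t_k)  # kg/s
    return mass_flow / density             # m^3/s

heat_load_w = 1000.0  # hypothetical 1 kW heat load
delta_t = 10.0        # hypothetical temperature rise, K

water = volumetric_flow_m3s(heat_load_w, WATER_CP, WATER_DENSITY, delta_t)
air = volumetric_flow_m3s(heat_load_w, AIR_CP, AIR_DENSITY, delta_t)

print(f"water: {water * 1000 * 60:.2f} L/min")
print(f"air:   {air * 1000 * 60:.0f} L/min")
print(f"air needs ~{air / water:.0f}x the volume of water")
```

The roughly 3,500-fold difference in volume is why a modest water loop can do the work of a large volume of moving air, and why the warmed outlet water is concentrated enough to be useful elsewhere.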

The HPC angle here is that since the SD650’s cooling system consistently keeps the CPUs from getting too hot, they can run in turbo mode continuously. Avoiding overheated silicon is becoming more and more important: as processors become more computationally dense, they run hotter and are forced to throttle back as temperatures rise. In the case of the Gold and Platinum Xeon SP CPUs, turbo mode boosts the clock about 500 MHz over the baseline frequency when all cores are in use, and by as much as 2000 MHz when fewer cores are active. As you might imagine, being able to operate at these higher speeds enables significantly better performance and throughput.

The impetus behind the SD650 apparently came from a collaboration between Lenovo and the Leibniz Supercomputing Center (LRZ), which was planning to increase its supercomputing capacity quite substantially. The challenge in Germany is that energy is a rather expensive commodity, averaging €0.16-0.18 per kilowatt-hour. That's about two to three times what US customers pay.

In a blog post published on February 22, Lenovo thermal architect Vinod Kamath runs the numbers:

> With air cooling, a data center consumes about 60% of the server power to cool the servers, compared to 40% with chilled water, and less than 10% with warm water like the SD650. The potential savings for an HPC cluster that has a power consumption of 4-5 MW, such as LRZ, can be substantial over its typical 4-5 year operating life with €100,000’s savings per year.
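The arithmetic behind that comparison is simple enough to sketch: cooling energy is the overhead fraction times the server power, run around the clock, priced at the local rate. The sketch uses Kamath's overhead fractions (treating "less than 10%" as an upper bound of 10%, which makes the savings estimate conservative) and the midpoint of the article's €0.16-0.18 price range. The 1 MW server load is a hypothetical example, and the model ignores everything except cooling energy.

```python
# Annual cost of cooling overhead under different cooling approaches.
# Overhead fractions are the ones quoted above (warm water's "<10%" is
# taken as 10%); the EUR 0.17/kWh price is the midpoint of the article's
# range. The 1 MW server load is hypothetical; results scale linearly.

HOURS_PER_YEAR = 8760

def annual_cooling_cost_eur(it_load_kw: float, overhead: float,
                            price_eur_per_kwh: float) -> float:
    """Cooling power = overhead fraction of server power, all year long."""
    return it_load_kw * overhead * HOURS_PER_YEAR * price_eur_per_kwh

it_load_kw = 1000.0  # hypothetical 1 MW of server power
price = 0.17         # EUR per kWh

costs = {name: annual_cooling_cost_eur(it_load_kw, fraction, price)
         for name, fraction in [("air", 0.60), ("chilled water", 0.40),
                                ("warm water", 0.10)]}

for name, cost in costs.items():
    print(f"{name:>13}: EUR {cost:,.0f} per year")

savings = costs["chilled water"] - costs["warm water"]
print(f"warm vs. chilled water: EUR {savings:,.0f} per year saved")
```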

Combining that cooling efficiency with the computational density of the Xeon SP processors, Lenovo was able to design a supercomputer for LRZ that would deliver 26.7 peak petaflops, an order-of-magnitude increase in computational capacity for the center, without having to upgrade the existing chilled water infrastructure. That system, known as SuperMUC-NG, is scheduled to boot up this year. Not only will it be the most powerful supercomputer in Germany, it will also be the most powerful system Lenovo has ever built.

If you’re interested in seeing the SD650 in action, check out the Lenovo video below. It’s reasonably hype-free and presents some interesting engineering detail with regard to the direct water cooling system.

<iframe src="https://www.youtube.com/embed/s4Ep442cn7I" width="672" height="378" frameborder="0" allowfullscreen="allowfullscreen"></iframe>