Feb. 17, 2017

By: Michael Feldman

The Tokyo Institute of Technology, also known as Tokyo Tech, has revealed that the TSUBAME 3.0 supercomputer scheduled to be installed this summer will provide 47 half precision (16-bit) petaflops of performance, making it one of the most powerful machines on the planet for artificial intelligence computation. The system is being built by HPE/SGI and will feature NVIDIA’s Tesla P100 GPUs.

Source: Tokyo Institute of Technology

For Tokyo Tech, the use of NVIDIA’s latest P100 GPUs is a logical step in TSUBAME’s evolution. The original 2006 system used ClearSpeed boards for acceleration, but was upgraded in 2008 with the Tesla S1040 cards. In 2010, TSUBAME 2.0 debuted with the Tesla M2050 modules, while the 2.5 upgrade included both the older S1050 and S1070 parts plus the newer Tesla K20X modules. Bringing the P100 GPUs into the TSUBAME lineage will not only help maintain backward compatibility for the CUDA applications developed on the Tokyo Tech machines for the last nine years, but will also provide an excellent platform for AI/machine learning codes.

In a press release from NVIDIA published Thursday, Tokyo Tech’s Satoshi Matsuoka, a professor of computer science who is building the system, said, “NVIDIA’s broad AI ecosystem, including thousands of deep learning and inference applications, will enable Tokyo Tech to begin training TSUBAME 3.0 immediately to help us more quickly solve some of the world’s once unsolvable problems.”

For Tokyo Tech’s supercomputing users, it’s a happy coincidence that the latest NVIDIA GPU is such a good fit with regard to AI workloads. Interest in artificial intelligence is especially high in Japan, given the country’s manufacturing heritage in robotics and what seems to be almost a cultural predisposition to automate everything.

When up and running, TSUBAME 3.0 will operate in conjunction with the existing TSUBAME 2.5 supercomputer, providing a total of 64 half precision petaflops. That would make it Japan’s top AI system, although the title is likely to be short-lived. The Tokyo-based National Institute of Advanced Industrial Science and Technology (AIST) is also constructing an AI-capable supercomputer, which is expected to supply 130 half precision petaflops when it is deployed in late 2017 or early 2018.

Although NVIDIA and Tokyo Tech are emphasizing the AI capability of the upcoming system, TSUBAME 3.0, like its predecessors, will also be used for conventional 64-bit supercomputing applications, and will be available to Japan’s academic research community and industry partners. For those traditional HPC tasks, it will rely on its 12 double precision petaflops, which will likely earn it a top 10 spot on the June TOP500 list if Tokyo Tech can complete a Linpack run in time.

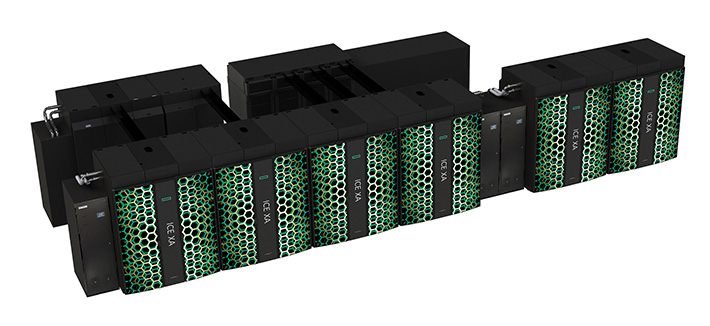

The system itself is a 540-node SGI ICE XA cluster, with each node housing two Intel Xeon E5-2680 v4 processors, four NVIDIA Tesla P100 GPUs, and 256 GB of main memory. The compute nodes will talk to each other via Intel’s 100 Gbps Omni-Path network, which will also be extended to the storage subsystem.
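As a rough cross-check of the headline numbers, the per-node figures above can be multiplied out. The sketch below is only a back-of-the-envelope estimate: the node counts come from the article, but the per-GPU peak rates are an assumption taken from NVIDIA's published specifications for the NVLink (SXM2) variant of the P100, and the function name `system_totals` is made up for illustration.

```python
# Per-node figures quoted in the article.
NODES = 540
GPUS_PER_NODE = 4
CPUS_PER_NODE = 2
MEMORY_PER_NODE_GB = 256

# ASSUMPTION (not from the article): peak rates for the NVLink (SXM2)
# variant of the Tesla P100, as listed in NVIDIA's published specifications.
P100_FP16_TFLOPS = 21.2
P100_FP64_TFLOPS = 5.3


def system_totals(nodes=NODES):
    """Return aggregate counts and GPU-only peak rates for the cluster."""
    gpus = nodes * GPUS_PER_NODE
    return {
        "gpus": gpus,
        "cpus": nodes * CPUS_PER_NODE,
        "memory_tb": nodes * MEMORY_PER_NODE_GB / 1000,  # decimal terabytes
        "gpu_fp16_pflops": gpus * P100_FP16_TFLOPS / 1000,
        "gpu_fp64_pflops": gpus * P100_FP64_TFLOPS / 1000,
    }


if __name__ == "__main__":
    for name, value in system_totals().items():
        print(f"{name}: {value}")
```

Under these assumptions the 2,160 GPUs alone account for roughly 45.8 half precision and 11.4 double precision petaflops, a little below the 47 and 12 petaflops quoted for the full system. The article does not break down the remainder; the Xeon processors are one plausible contributor.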

Speaking of which, the storage infrastructure will be supplied by DataDirect Networks (DDN) and will provide 15.9 petabytes of Lustre file system capacity based on three ES14KX appliances. The ES14KX is currently DDN’s top-of-the-line file system storage appliance, delivering up to 50 GB/second of I/O per enclosure. It can theoretically scale to hundreds of petabytes, so the TSUBAME 3.0 installation will be well within the product’s reach.

Energy efficiency is also likely to be a feature of the new system, thanks primarily to the highly power-efficient P100 GPUs. In addition, the TSUBAME 3.0 designers are equipping the supercomputer with a warm water cooling system and are predicting a PUE (Power Usage Effectiveness) as low as 1.033. That should enable the machine to run at top speed without the need to throttle it back during heavy use. A top 10 spot on the Green500 list is all but assured.
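For readers unfamiliar with the metric, PUE is the ratio of total facility power to the power consumed by the IT equipment itself, so 1.0 is the theoretical floor and lower values are better. The short sketch below shows what a PUE of 1.033 would imply; the 1,000 kW IT load in the usage line is a made-up illustrative figure, not TSUBAME 3.0's actual power draw, and both function names are hypothetical.

```python
def facility_power_kw(it_power_kw, pue):
    """Total facility power implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_power_kw * pue


def overhead_fraction(pue):
    """Cooling and other non-IT power, as a fraction of IT power."""
    return pue - 1.0


if __name__ == "__main__":
    # Hypothetical 1,000 kW IT load, chosen only to make the ratio concrete.
    print(facility_power_kw(1000.0, 1.033))
    print(overhead_fraction(1.033))
```

In other words, at the predicted PUE, cooling and other facility overhead would add only about 3.3 percent on top of the power drawn by the compute hardware.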