Aug. 15, 2018

By: Michael Feldman

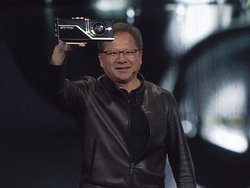

In a bid to reinvent computer graphics and visualization, NVIDIA has developed a new architecture that merges AI, ray tracing, rasterization, and computation. The new architecture, known as Turing, was unveiled this week by NVIDIA CEO Jensen Huang in his keynote address at SIGGRAPH 2018.

Calling it the greatest leap in the graphics processor since the CUDA GPU was introduced in 2006, Huang told the audience the new Turing GPUs will enable designers and artists to render photorealistic scenes in real time. “This fundamentally changes how computer graphics is going to be done,” he said. “It’s a step function in realism.”

The first products incorporating the architecture will be a new line of Quadro RTX professional GPUs. True to their name, the GPUs are aimed at professionals doing video content creation and automotive and architectural design, as well as researchers doing scientific visualization. The GPUs will be available in both workstations and servers, beginning in the fourth quarter of the year. The new product line is summarized below:

| GPU | Memory | Ray Tracing | CUDA Cores | Tensor Cores | Estimated Price |
| --- | --- | --- | --- | --- | --- |
| Quadro RTX 8000 | 48GB | 10 Giga-rays/sec | 4,608 | 576 | $10,000 |
| Quadro RTX 6000 | 24GB | 10 Giga-rays/sec | 4,608 | 576 | $6,300 |
| Quadro RTX 5000 | 16GB | 6 Giga-rays/sec | 3,072 | 384 | $2,300 |

NVIDIA will also be launching its own RTX Server based on the top-of-the-line Quadro RTX 8000 GPU. This box is designed to be the basis of render farms, and because each server is so powerful, it can dramatically reduce the size and cost of the infrastructure. NVIDIA claims four RTX servers, equipped with eight GPUs apiece, can provide the same rendering throughput as 240 dual-socket servers powered by Skylake CPUs. According to NVIDIA’s calculations, that will reduce the capital cost from $2 million to $500,000, while reducing the power required from 144 to 13 kilowatts.
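To put NVIDIA's render farm claim in perspective, the quoted figures can be reduced to a few ratios. The snippet below is only a back-of-the-envelope sketch: the numbers are the ones cited above, while the variable names and the `consolidation_summary` helper are invented here for illustration and do not come from NVIDIA.

```python
# Figures as quoted in NVIDIA's claim (not independently verified).
CPU_SERVERS = 240            # dual-socket Skylake servers
RTX_SERVERS = 4              # NVIDIA RTX servers
GPUS_PER_RTX_SERVER = 8      # Quadro RTX 8000 GPUs per server
CPU_FARM_COST_USD = 2_000_000
RTX_FARM_COST_USD = 500_000
CPU_FARM_POWER_KW = 144
RTX_FARM_POWER_KW = 13


def consolidation_summary():
    """Return simple ratios comparing the CPU farm with the RTX farm."""
    total_gpus = RTX_SERVERS * GPUS_PER_RTX_SERVER
    return {
        "cpu_servers_per_rtx_server": CPU_SERVERS / RTX_SERVERS,
        "cpu_servers_per_gpu": CPU_SERVERS / total_gpus,
        "cost_ratio": CPU_FARM_COST_USD / RTX_FARM_COST_USD,
        "power_ratio": CPU_FARM_POWER_KW / RTX_FARM_POWER_KW,
    }


if __name__ == "__main__":
    for name, value in consolidation_summary().items():
        print(f"{name}: {value:.1f}")
```

By these figures, each RTX server stands in for 60 CPU servers, at a quarter of the capital cost and roughly one-eleventh of the power.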

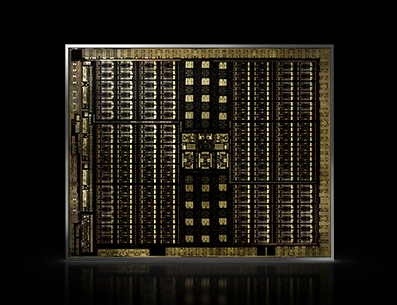

Although Huang didn’t reveal all the technical specs of the new GPUs, he did sketch out the Turing design in some detail, especially with regard to its multi-function nature. This is reflected in the different hardware elements of the GPU, which include a streaming multiprocessor (SM) for compute and shading, Tensor Cores for deep learning/AI, and RT Cores for ray tracing. The chip itself comprises up to 18.6 billion transistors on a 754 mm² die. That’s nearly the same size as a Tesla V100 GPU, which sports 21.1 billion transistors on an 815 mm² die.

The Turing silicon will be backed by a software stack that taps into the capabilities of the new GPUs. Known as NVIDIA RTX, the software includes support for Pixar’s Universal Scene Description (USD) and the Material Definition Language (MDL), as well as APIs for rasterization, ray tracing (OptiX, DXR, and Vulkan), simulation (PhysX, FleX and CUDA 10), and AI (NGX SDK).

A key element in the Turing architecture is the RT Cores, a specialized bit of circuitry that enables real-time ray tracing for accurate shadowing, reflections, refractions, and global illumination. Ray tracing essentially simulates light, which sounds simple enough, but it turns out to be very computationally intense. As the above product chart shows, the new Quadros can simulate up to 10 billion rays per second, which would be impossible with a more generic GPU design.
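One way to get a feel for what 10 billion rays per second buys is to divide it across the pixels of a real-time frame. The sketch below does that arithmetic. The resolutions and the 60 frames-per-second target are assumptions chosen for illustration, not figures from NVIDIA, and the function name is hypothetical.

```python
def rays_per_pixel_per_frame(giga_rays_per_sec, width, height, fps):
    """Average ray budget per pixel per frame at a given peak ray rate.

    Assumes the peak rate is fully sustained and spread evenly over
    every pixel, which a real renderer would not achieve exactly.
    """
    rays_per_sec = giga_rays_per_sec * 1e9
    pixels_per_sec = width * height * fps
    return rays_per_sec / pixels_per_sec


if __name__ == "__main__":
    # Assumed display targets: 4K UHD and 1080p, both at 60 frames/sec.
    for label, (w, h) in {"4K UHD": (3840, 2160), "1080p": (1920, 1080)}.items():
        budget = rays_per_pixel_per_frame(10, w, h, 60)
        print(f"{label} @ 60 fps: about {budget:.0f} rays per pixel per frame")
```

Under those assumptions, the top-end Quadros would have a budget of roughly 20 rays per pixel per frame at 4K, which hints at why denoising and other AI-assisted techniques matter for real-time work.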

The on-board memory is based on GDDR6, something of a departure from the Quadro GV100, which incorporated 32GB of HBM2 memory. Memory capacity on the new RTX processors can be effectively doubled by hooking two GPUs together via NVLink, making it possible to hold larger images in local memory.

As usual, the SM will supply compute and graphics rasterization, but with a few twists. With Turing, NVIDIA has separated the floating point and integer pipelines so that they can operate simultaneously, a feature that is also available in the Volta V100. This enables the GPU to do address calculations and numerical calculations at the same time, which can be a big time saver. As a result, the new Quadro chips can deliver up to 16 teraflops and 16 teraops of floating point and integer operations, respectively, in parallel. The SM also comes with a unified cache with double the bandwidth of the previous generation architecture.

Perhaps the most interesting aspect of the new Quadro processors is the Turing Tensor Cores. For graphics and visualization work, the Tensor Cores can be used for things like AI-based denoising, deep learning anti-aliasing (DLAA), frame interpolation, and resolution scaling. These techniques can be used to reduce render time, increase image resolution, or create special effects.

The Turing Tensor Cores are similar to those in the Volta-based V100 GPU, but in this updated version NVIDIA has significantly boosted tensor calculations for INT8 (8-bit integer), which are commonly used for inferencing neural networks. In the V100, INT8 performance topped out at 62.8 teraops, but in the Quadro RTX chips, this has been boosted to a whopping 250 teraops. The new Tensor Cores also provide an INT4 (4-bit integer) capability for certain types of inferencing work that can get by with even less precision. That doubles the tensor performance to 500 teraops – half a petaop. The new Tensor Cores also provide 125 teraflops for FP16 data – same as the V100 – if for some reason you decide to use the Quadro chips for neural net training.
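The relative gains are easier to see as ratios. The following sketch simply divides the peak figures quoted above. The dictionary keys and the `speedup` helper are illustrative names, and the values are vendor peak numbers rather than measured results.

```python
# Peak tensor throughput as quoted in the article, in tera-operations
# per second (teraflops for FP16, teraops for the integer formats).
PEAK_TERAOPS = {
    "V100 INT8": 62.8,
    "Turing INT8": 250.0,
    "Turing INT4": 500.0,
    "Turing FP16": 125.0,
}


def speedup(new, old):
    """Ratio of two quoted peak rates, looked up by key."""
    return PEAK_TERAOPS[new] / PEAK_TERAOPS[old]


if __name__ == "__main__":
    print(f"Turing INT8 vs V100 INT8:   {speedup('Turing INT8', 'V100 INT8'):.2f}x")
    print(f"Turing INT4 vs Turing INT8: {speedup('Turing INT4', 'Turing INT8'):.2f}x")
    print(f"Turing INT4 vs Turing FP16: {speedup('Turing INT4', 'Turing FP16'):.2f}x")
```

In other words, the quoted INT8 rate is roughly four times that of the V100, and each halving of precision from FP16 to INT8 to INT4 doubles the peak rate.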

Given NVIDIA’s interest in the market for large-scale inferencing in the datacenter, this enhanced capability for low-precision integer math is likely to show up in the Tesla GPU line in the not-too-distant future. The last NVIDIA products specifically aimed at this market are the Pascal-based P4 and P40 GPUs. And even though the V100 offers a good amount of capability in this area, it’s a rather expensive and high-wattage solution for dedicated inferencing.

It remains to be seen whether NVIDIA comes out with a full-blown Tesla product incorporating other elements of the Turing architecture. One thing seems certain though: as GPUs expand into applications like AI, HPC, and now real-time rendering, the company is building more specialized hardware to meet the performance demands. And given NVIDIA’s success in these areas, it seems to have chosen the right approach.