July 3, 2017

By: Michael Feldman

Of all the supercomputing trends revealed on recent TOP500 lists, the most worrisome is the decline in performance growth over the last several years – worrisome not only because performance is the lifeblood of the HPC industry, but also because there is no definitive cause of the slowdown.

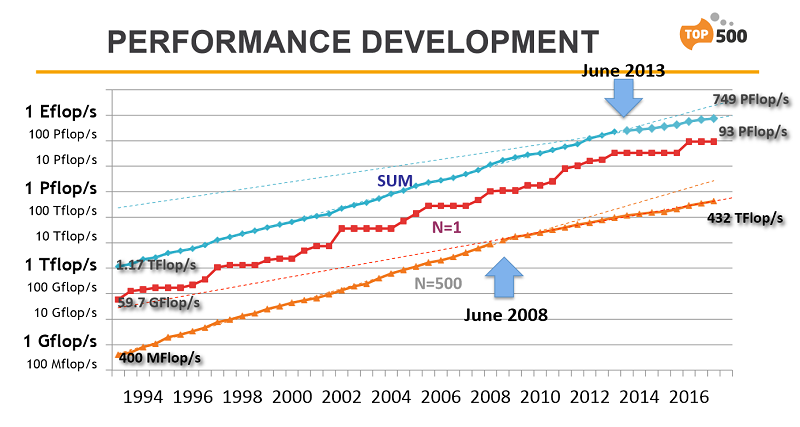

TOP500 aggregate performance (blue), top system performance (red), and last system performance (orange). Credit Erich Strohmaier

That said, there are a few smoking guns worth considering. An obvious one is Moore’s Law, or rather the purported slowing of Moore’s Law. Performance increases in supercomputer hardware rely on a combination of getting access to more powerful computer chips and putting more of them into a system. The latter explains why aggregate performance on the TOP500 list historically grew somewhat faster than the rate of Moore’s Law.

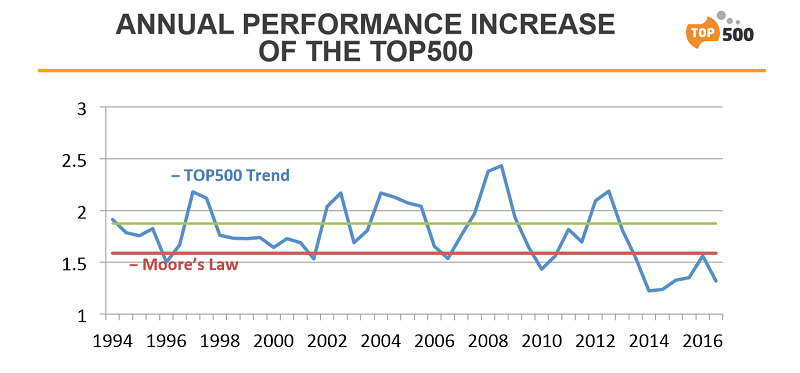

But this no longer appears to be the case. Since about 2013, the annual increase in aggregate performance on the TOP500 list has fallen not only below its historical rate but below the Moore’s Law rate as well. As the chart shows, performance growth has had its ups and downs over the years, but the recent dip appears to signal a new trend.

TOP500 rate of performance increase. Credit Erich Strohmaier

So if Moore’s Law is slowing, why don’t users just order bigger systems with more servers? Well, they are – system core counts are certainly rising – but there are a number of disincentives to simply throwing more servers at the problem. A major limitation is power.

Although power data on the list is sketchier than performance data, there is a clear trend toward increased energy usage. For example, over the last 10 years, the power draw of the most power-hungry supercomputer on the list increased from around 2.0 MW in 2007 (ASC Purple) to more than 17.8 MW in 2017 (Tianhe-2). In fact, three of the largest systems today use more than 10 MW. The systems in the middle of the list appear to be drawing more power as well, although the increase is less pronounced than it is for the biggest systems.

There’s nothing inherently wrong with building supercomputers that chew through tens of megawatts of electricity. But given the cost of power, there just won’t be very many of them. The nominal goal of building the first exascale supercomputers in the 20 to 30 MW range ensures there will be only a handful of such machines in the world.

The problem with using additional electricity is not just that it costs more, leaving less money to spend on hardware, but that once you grow beyond the power budget of your datacenter, you’re stuck. At that point, you either have to build a bigger facility, wait until the hardware becomes more energy efficient, or burst some of your workload to the cloud. All of those scenarios lead to the slower performance growth we see on the TOP500.

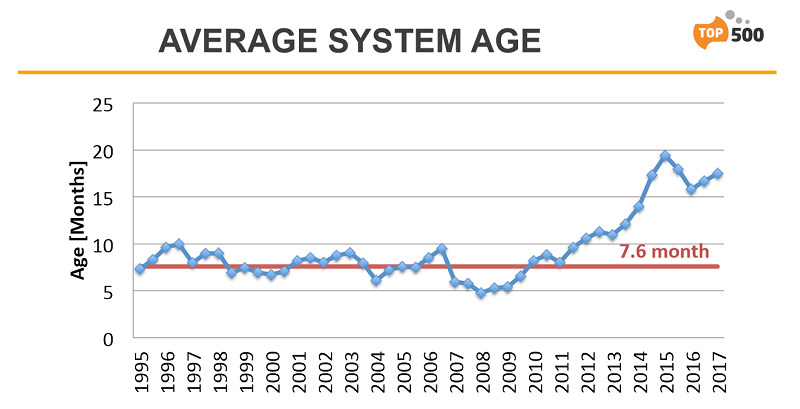

It also leads to reduced system turnover, another recent trend that appears to have clearly established itself. As the chart shows, the average time a system spends on the list has tripled since 2008 and is about double the historical average. It’s almost certain that this means users are hanging on to their existing systems for longer periods of time.

Average lifetime of systems on the TOP500 list. Credit Erich Strohmaier

None of this bodes well for supercomputer makers. Cray, the purest HPC company in the world, has been feeling some of the effects of stretched-out system procurements. Since at least 2016, the company has seen a contraction in the number of systems it is able to bid on (although it has been able to compensate to some degree with better win rates). Cray’s recent forays into cloud computing and AI are two ways it is looking to establish revenue streams that are not reliant on traditional HPC system sales.

Analyst firms Intersect360 Research and Hyperion (formerly IDC) remain bullish about the HPC market, although their growth projections have been shaved back compared to a few years ago. Hyperion is forecasting a 5.8 percent compound annual growth rate (CAGR) for HPC servers over the next five years, a full point and a half lower than the 7.3 percent CAGR it was projecting in 2012. Meanwhile, Intersect360 Research is currently projecting a 4.7 percent CAGR for server hardware, whereas in 2010 it was forecasting a 7.0 percent growth rate (although that figure covered the entire HPC market, not just servers).
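Small differences in annual rates compound into larger gaps. As a rough illustration, assuming each forecast compounds annually over exactly five years, the cumulative growth implied by the old and new CAGR figures can be computed as follows. The function name is our own, and the Intersect360 2010 figure covered the whole market rather than servers alone, so that pair is not a strict like-for-like comparison.

```python
def cumulative_growth(cagr_percent: float, years: int = 5) -> float:
    """Total growth over `years` at a compound annual rate, returned as a percent."""
    return ((1 + cagr_percent / 100) ** years - 1) * 100


# CAGR figures quoted in the text (percent per year).
forecasts = {
    "Hyperion, 2012 forecast": 7.3,
    "Hyperion, current forecast": 5.8,
    "Intersect360, 2010 forecast (whole market)": 7.0,
    "Intersect360, current forecast (servers)": 4.7,
}

for label, cagr in forecasts.items():
    print(f"{label}: {cagr:.1f}% CAGR -> {cumulative_growth(cagr):.1f}% over five years")
```

Under these assumptions, Hyperion’s revision cuts five-year cumulative growth from roughly 42 percent to roughly 33 percent.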

The demand for greater computing power from both researchers and commercial users appears to be intact, which makes the slowdown in performance growth all the more troubling. The same phenomenon appears to be partly behind the current trend toward more diverse architectures and heterogeneity. The most popular new processors (GPUs, Xeon Phis, and to a lesser extent FPGAs) all exhibit better performance-per-watt characteristics than the multicore CPUs they nominally replace. Interest in the ARM architecture follows the same logic.

Of course, all of these processors will be subject to the erosion of Moore’s Law. So unless a more fundamental technology or architectural approach emerges to change the power-performance calculus, slower growth will persist. That won’t wipe out HPC usage, any more than the flat growth of enterprise computing wiped out businesses. It will just be the new normal… until something else comes along.