April 3, 2018

By: Chris Downing, Red Oak Consulting

The last 18 months have seen NVMe drives rise to the forefront of high-performance storage technology, becoming the preferred option for anyone with sufficient budget to include them in a procurement. Only a paucity of PCIe lanes and the unfortunately timed spike in NAND prices have tempered sales growth in this high-end segment. But both are temporary problems.

While NVMe drives represent a welcome boost, they have not resulted in a fundamental shift in how systems are designed. The underlying characteristics of the drives themselves are still derived from the need to balance performance, endurance and cost along a fairly well-understood technology roadmap for silicon NAND.

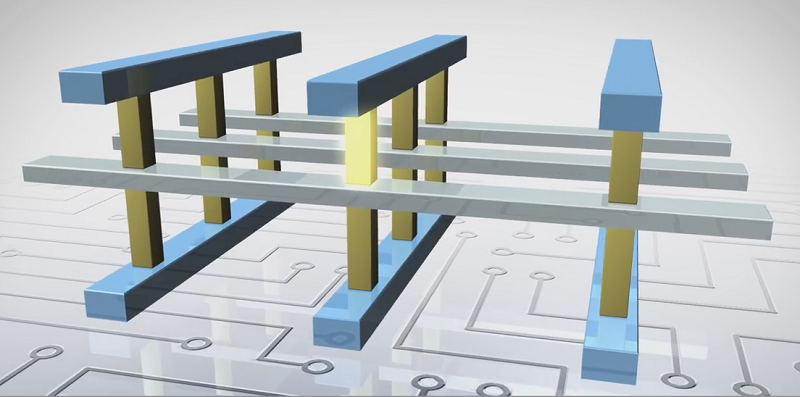

A long-awaited addition to the memory and storage hierarchy is currently trickling out into the world in the form of Intel's 3D XPoint devices, developed in collaboration with Micron. 3D XPoint can be described as “storage-class memory,” with performance, price and lifespan somewhere between memory and solid-state storage. It is being positioned either as a large-but-somewhat-slow augmentation to the pool of system memory or as a persistent cache sitting above SSDs and HDDs.

Although Intel has set out its plans to make DIMM-style 3D XPoint products soon, the storage-centric design sold under the “Optane” brand was the first implementation of the technology to hit the market. Thus far, though, the Optane devices offered for both consumer and commercial use have been met with overwhelming indifference. While their characteristics are impressive, they are simply not a big enough jump for most system designers to justify the cost, particularly given the small capacities available.

Intel will no doubt be hoping that the arrival of second-generation 3D XPoint, which will enable the DIMM form factor and additional modes of operation, will turn around the fortunes of its new technology. The hope will be shared by Micron, which is due to make its own products (QuantX) available in a similar timeframe.

Sharpening the edge

Besides the growth in non-volatile memory that 3D XPoint should eventually bring in the server market, another way in which the solid-state storage landscape is likely to change this year is through the expansion of edge computing. As part of this evolutionary shift from “the cloud” to “the fog,” local devices are likely to be tasked with a greater proportion of important computing workloads.

Edge devices will naturally need to be small, low-power and resilient: all properties which suggest that SSDs will be essential rather than just nice-to-have. What form this solid-state storage takes is more nuanced. Where cost is the most important factor, cheap NAND will continue to dominate. But in some high-end applications, I/O latency could become an important bottleneck, in which case the extra cost of non-volatile memory might be worth it.

Blade servers and the increased use of direct-to-chip cooling will also complement non-volatile memory technologies. Where the prospect of a set of high-wattage DIMMs in every chassis might previously have been a headache (if only because of the increased chassis fan noise), solutions such as those on offer from CoolIT, Lenovo and others will soon become even more important. Likewise, pushing more solid-state components into the stack is likely to make spinning disks in compute nodes a thing of the past, making immersion cooling a more broadly applicable concept, to the dismay of administrators with nice watches everywhere.

Thanks for the memories

With more storage-class memory products only due to arrive in late 2018 or early 2019, it is hard to say what the competitive landscape will look like. Alongside the 3D XPoint and QuantX devices from Intel and Micron will be alternatives from the likes of Samsung, which will be pushing its Z-NAND products, based on standard 3D NAND with optimizations and increased endurance.

Vendor lock-in and incompatible usage models are worth watching out for as non-volatile DIMMs (NVDIMMs) become available. 3D XPoint and QuantX are expected to use the “NVDIMM-F” standard, which relies on each NVDIMM being paired with a traditional DRAM DIMM in a different slot. Other vendors may choose “NVDIMM-P” or “NVDIMM-N,” which both use a single DIMM to provide standard main memory with persistence built in. So far, neither AMD nor the ARM vendors have publicly detailed how they will support any of these standards.

In the accelerator space, it is likely that all of the focus for on-device memory and storage will remain on high-bandwidth solutions such as HBM2, though AMD clearly see a route to making slower on-board storage valuable, as shown by their Radeon Pro SSG devices. Whether this architecture could be usefully tweaked to offer advantages in HPC remains to be seen.

Remembering where we were going

The eventual goal of these new memory and storage technologies, at least from the perspective of the HPC division at Intel, is for them to form the building blocks of DAOS, a software-defined storage project which is expected to replace Lustre as we enter the exascale era. Other vendors have spent the last couple of years building intermediate solutions that exploit the performance benefits of NVMe and the advantages of non-volatile memory without such a steep development curve. Examples such as DDN's “Infinite Memory Engine” cache and Excelero's NVMe-over-Fabrics offering, which acts as a distributed burst buffer, should be compatible with non-volatile DIMMs shortly after they appear.

Even though it will be a substantial change from current storage designs, Intel’s DAOS still falls short of the ambition put forward by Hewlett-Packard when it first garnered attention for its interest in memristors. Memristors were expected to be the first style of storage-class memory to hit the market, but “The Machine,” which would have completely detached memory from compute by leveraging silicon photonics, has drifted into obscurity as Hewlett Packard Enterprise has steadily backed off on its ambitions.

It seems, then, that storage-class memory will not live up to its original promise, at least not for a while yet. Given the costs faced by anyone trying to incorporate even regular SSDs into their systems, it might be better to worry less about ambition and focus on how the technology can solve problems here and now. When talking up performance numbers, Intel highlight that 3D XPoint favours low queue depths, which are a better match for real workloads than the deep queues used in most synthetic SSD benchmarks. Working out how much benefit your own applications might see is a problem ripe for benchmarking. The extra hassle might be tedious, but usage-driven benchmarks are a decision-support mechanism that people will need to return to anyway, now that Intel is no longer the only game in CPU town. With this extra challenge to face, as well as all that immersion cooling goop, HPC teams had better roll up their sleeves…
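Such usage-driven benchmarking can start small. The sketch below, assuming a Unix system and Python 3.8+, times 4 KiB random reads against a file on the device under test and reports median and 99th-percentile latency at a chosen queue depth. The function name `random_read_latency` is hypothetical, and emulating queue depth with a pool of threads issuing blocking `os.pread` calls is a crude assumption, not any vendor's methodology. Reads may also be served from the page cache unless the file is much larger than RAM or caches are dropped first; a dedicated tool such as fio, with `direct=1`, an asynchronous ioengine and varying `iodepth`, will give more rigorous numbers.

```python
import os
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor

BLOCK = 4096  # 4 KiB reads, a common block size for random-I/O tests


def _timed_reads(path, offsets):
    """Issue blocking reads one at a time and record each latency in microseconds."""
    latencies_us = []
    fd = os.open(path, os.O_RDONLY)
    try:
        for off in offsets:
            t0 = time.perf_counter_ns()
            os.pread(fd, BLOCK, off)
            latencies_us.append((time.perf_counter_ns() - t0) / 1000)
    finally:
        os.close(fd)
    return latencies_us


def random_read_latency(path, n_reads=1000, queue_depth=1, seed=0):
    """Hypothetical helper: approximate random-read latency at a given queue depth.

    Assumption: queue depth is emulated by `queue_depth` threads, each keeping
    one blocking read outstanding. Results include page-cache effects.
    """
    n_blocks = os.path.getsize(path) // BLOCK
    if n_blocks == 0:
        raise ValueError("file must be at least one block long")
    rng = random.Random(seed)
    offsets = [rng.randrange(n_blocks) * BLOCK for _ in range(n_reads)]
    # Deal offsets round-robin to the workers so that up to `queue_depth`
    # reads can be in flight at once.
    chunks = [offsets[i::queue_depth] for i in range(queue_depth)]
    with ThreadPoolExecutor(max_workers=queue_depth) as pool:
        results = pool.map(lambda c: _timed_reads(path, c), chunks)
    lat = [x for r in results for x in r]
    return {
        "queue_depth": queue_depth,
        "median_us": statistics.median(lat),
        "p99_us": statistics.quantiles(lat, n=100)[98],  # 99th percentile
    }


if __name__ == "__main__":
    import sys

    if len(sys.argv) > 1:
        # Compare low and high queue depths on the same test file.
        for qd in (1, 4, 16, 32):
            print(random_read_latency(sys.argv[1], queue_depth=qd))
```

Run against a large file on each candidate device (for example `python qd_latency.py /mnt/candidate/testfile`, where the path is purely illustrative), the interesting comparison is how much the queue-depth-1 latency differs between devices, since that is the regime Intel's claims concern.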

About the Author

Chris Downing joined Red Oak Consulting @redoakHPC in 2014 on completion of his PhD thesis in computational chemistry at University College London. Having performed academic research using the last two UK national supercomputing services (HECToR and ARCHER) as well as a number of smaller HPC resources, Chris is familiar with the complexities of matching both hardware and software to user requirements. His detailed knowledge of materials chemistry and solid-state physics means that he is well placed to offer insight into emerging technologies. As a Senior Consultant with a highly technical skill set, Chris works mainly in the innovation and research team, providing a broad range of technical consultancy services.

To find out more, go to www.redoakconsulting.co.uk.