July 25, 2016

By: Michael Feldman

Network latency and bandwidth often turn out to be choke points on application performance for many HPC codes. As a result, the network component of HPC systems has successfully resisted the trend toward general-purpose solutions, Ethernet notwithstanding. Such an environment is conducive to innovation and experimentation, as exemplified by EXTOLL’s network technology.

EXTOLL, a German-based company that offers a switchless network aimed at the upper ranks of high performance computing, is a spin-out from the University of Heidelberg. The now five-year-old company has a number of products, one of which is being trialed at DEEP-ER, a European Union (EU) project that is exploring exascale computing.

TOP500 News got the opportunity to ask the EXTOLL brain trust about what makes their network technology so unique and to explain the latest developments at the company. Offering their perspectives here are the three company co-founders: Managing Director and CTO Mondrian Nuessle, Senior Scientist Ulrich Brüning, and Managing Director Ulrich Krackhardt.

TOP500 News: Can you briefly describe the advantages of EXTOLL’s network technology compared to other lossless network fabrics like InfiniBand and Omni-Path?

Ulrich Krackhardt: The EXTOLL technology has been genuinely developed for the demands of HPC. All hardware and software components have been optimized in a holistic manner. Hence, the EXTOLL technology is highly efficient.

As a direct network, EXTOLL does not require central switches, which consume performance, power, cooling and space budget. The lack of central switches also implies lower CapEx and a linear scaling of costs.

InfiniBand was originally designed for mass storage applications, not for the specific demands of HPC. As a result, part of its performance budget goes unused by design. In addition, the cost of the central switches rises significantly with the size of the network. Consequently, the cost advantage of EXTOLL over InfiniBand-based networks grows with the size of the network.
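To illustrate why central-switch cost can grow faster than the node count, the sketch below uses the textbook switch count for a full folded-Clos (fat tree) network built from identical switches. The 36-port radix and the assumption of a full, non-blocking tree are illustrative only; real installations often use tapered trees, and the model ignores cables and optics. It is not EXTOLL's or any vendor's cost model.

```python
def fat_tree_size(radix: int, levels: int) -> tuple[int, int]:
    """Hosts and switches in a full folded-Clos (fat tree) built from `radix`-port switches."""
    half = radix // 2  # below the top level, each switch uses half its ports down, half up
    hosts = 2 * half ** levels
    switches = (2 * levels - 1) * half ** (levels - 1)
    return hosts, switches


def direct_network_size(nodes: int) -> tuple[int, int]:
    """A direct network routes inside each node's NIC, so it needs no external switches."""
    return nodes, 0


if __name__ == "__main__":
    RADIX = 36  # assumed switch port count, for illustration only
    for levels in (1, 2, 3):
        hosts, switches = fat_tree_size(RADIX, levels)
        print(f"{levels}-level fat tree: {hosts:>6} hosts, {switches:>5} switches, "
              f"{switches / hosts:.3f} switches per host")
```

In this model the number of switches per host is (2L - 1)/k for an L-level tree of k-port switches, so every extra level needed to reach more nodes raises the per-host switch count, while a direct network adds only one NIC per node.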

Mondrian Nuessle: On the performance side, EXTOLL implements a fast link-level retransmission protocol, which can help a lot in demanding applications. As a result, the inevitable bit errors on high-speed links have very little impact on system performance, much less than with end-to-end retransmission, or with forward error correction schemes, which add significant latency to every network hop.
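The general idea of link-level retransmission can be sketched as a go-back-N protocol running on a single link: the sender keeps frames in a replay buffer until its neighbor acknowledges them, and a CRC failure triggers a replay over that one hop only. The interview does not describe EXTOLL's actual framing, CRC or buffer sizes; the classes and the `transfer` helper below are hypothetical and model frames serially, without in-flight pipelining.

```python
import zlib
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    seq: int
    payload: bytes
    crc: int


class LinkSender:
    """Keeps every frame in a replay buffer until the neighbor on this link acknowledges it."""

    def __init__(self) -> None:
        self.next_seq = 0
        self.replay = deque()  # unacknowledged frames, oldest first

    def send(self, payload: bytes) -> Frame:
        frame = Frame(self.next_seq, payload, zlib.crc32(payload))
        self.replay.append(frame)
        self.next_seq += 1
        return frame

    def on_ack(self, seq: int) -> None:
        # Cumulative ACK: frames up to and including `seq` arrived intact.
        while self.replay and self.replay[0].seq <= seq:
            self.replay.popleft()

    def on_nack(self, seq: int) -> list:
        # Go-back-N: replay every buffered frame from `seq` onward, over this hop only.
        return [f for f in self.replay if f.seq >= seq]


class LinkReceiver:
    def __init__(self) -> None:
        self.expected = 0
        self.delivered = []

    def receive(self, frame: Frame) -> tuple:
        # Reject out-of-order frames and frames whose CRC no longer matches the payload.
        if frame.seq != self.expected or zlib.crc32(frame.payload) != frame.crc:
            return ("NACK", self.expected)
        self.delivered.append(frame.payload)
        self.expected += 1
        return ("ACK", frame.seq)


def transfer(payloads, corrupt_once):
    """Send non-empty payloads over one link; frames whose seq is in `corrupt_once` get one bit flipped once."""
    tx, rx = LinkSender(), LinkReceiver()
    queue = deque(tx.send(p) for p in payloads)
    already_corrupted = set()
    frames_on_wire = 0
    while queue:
        frame = queue.popleft()
        frames_on_wire += 1
        if frame.seq in corrupt_once and frame.seq not in already_corrupted:
            already_corrupted.add(frame.seq)
            flipped = bytes([frame.payload[0] ^ 0x01]) + frame.payload[1:]
            frame = Frame(frame.seq, flipped, frame.crc)  # simulated bit error, CRC unchanged
        kind, seq = rx.receive(frame)
        if kind == "ACK":
            tx.on_ack(seq)
        else:
            queue = deque(tx.on_nack(seq))
    return rx.delivered, frames_on_wire
```

For example, `transfer([b"a", b"b", b"c", b"d"], {1})` still delivers all four payloads in order; the one corrupted frame costs a replay on the affected link rather than a resend across the whole source-to-destination path.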

EXTOLL was also designed to keep its memory footprint relatively low and to scale well; for example, there are no queue pairs for every connection in the network. Additionally, native multicast, global low-skew interrupts, hardware barrier synchronization, adaptive routing capabilities, very low latency and an efficient network protocol add to the advantages EXTOLL provides.
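The memory argument comes down to how connection state scales. The sketch below counts the worst case for a fully connected reliable-connection (RC) setup, where each process holds one queue pair per remote process, against a connectionless model with one endpoint per local process. The 64 ranks per node is an assumption, and InfiniBand also offers UD, XRC and Dynamically Connected transports and on-demand connection setup that reduce this count; the sketch only shows the scaling trend, not EXTOLL's actual per-process state.

```python
def rc_queue_pairs_per_node(nodes: int, procs_per_node: int) -> int:
    """QPs one node holds if each local process keeps an RC connection to every remote process."""
    remote_procs = (nodes - 1) * procs_per_node
    return procs_per_node * remote_procs


def connectionless_endpoints_per_node(nodes: int, procs_per_node: int) -> int:
    """One endpoint per local process; `nodes` is accepted only to mirror the function above."""
    return procs_per_node


if __name__ == "__main__":
    PROCS = 64  # assumed MPI ranks per node
    for nodes in (100, 1_000, 10_000):
        print(nodes,
              rc_queue_pairs_per_node(nodes, PROCS),
              connectionless_endpoints_per_node(nodes, PROCS))
```

With 1,000 nodes and 64 ranks each, the fully connected RC case already needs about four million queue pairs per node, and the count grows linearly with every node added, while the connectionless count stays flat.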

Since Omni-Path is very similar to InfiniBand in many ways, the story is pretty much the same as for InfiniBand.

TOP500 News: In your TOURMALET product, you recently added support for PCIe-attached accelerators so that they can be integrated into the network on host-less nodes. What was added to the technology to enable this capability?

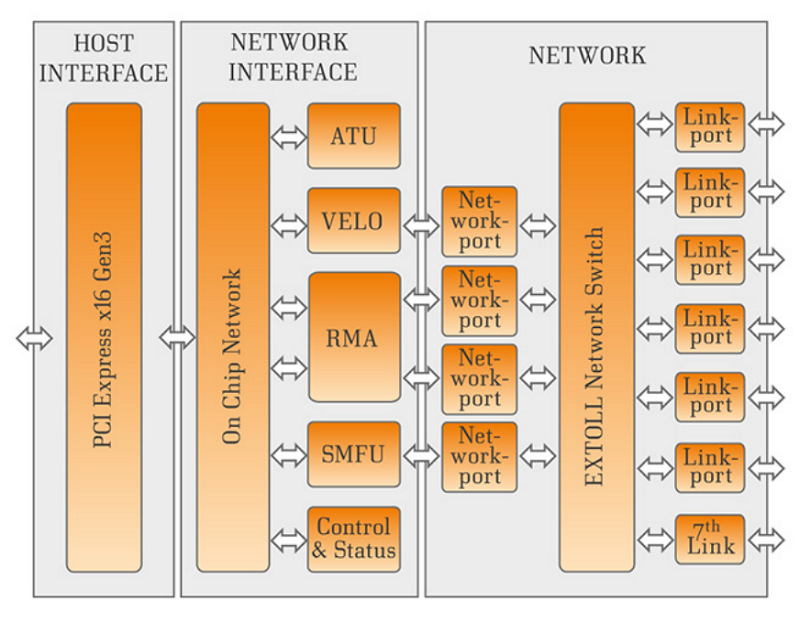

Ulrich Krackhardt: The EXTOLL network technology is embodied in the current ASIC, named TOURMALET. This ASIC has a PCIe root complex and can thus provide a PCIe root port. In this way, TOURMALET plays the role of a host, including boot-up, for any hardware attached to it over a PCIe link.

The recent progress we made is on the software side in the sense that we managed to write an appropriate driver for the GPU interaction. As you can imagine, there is little documentation for using GPUs that way and it took us a while to sort it out. I’m not sure whether all GPU engineers are aware of this way of running GPUs directly in a network.

TOP500 News: What is the rationale for host-less accelerator support?

Ulrich Krackhardt: A single node consists of an EXTOLL NIC and an accelerator, for example, an Intel Xeon Phi, a GPU or an FPGA. Eliminating the per-node host brings multiple advantages, including reduced CapEx, lower operating costs thanks to energy savings in both operation and cooling, and denser packaging, which saves valuable space or, alternatively, allows more efficient use of the available space.

Such nodes can form a booster cluster of, say, N accelerator nodes. Combined with a second cluster of, say, M scalar nodes, scalar compute power is spatially decoupled from vector compute power. Hence, any number of accelerator nodes may be assigned to any number of scalar nodes. This introduces a previously unavailable degree of flexibility.

For best performance and highest flexibility, both networks should be EXTOLL-type networks, which was the approach used in the EU’s DEEP-ER project, but this is not mandatory, as the previous DEEP project showed.

The special features of the EXTOLL network technology open up game-changing opportunities, especially in view of accelerated computing. The EXTOLL ASIC TOURMALET, as well as the PCIe board, provide a 7th link that offers a break-out feature: an accelerator can be attached to a NIC while the regular network topology is maintained.

TOP500 News: How did you get around the need for external switches with the TOURMALET adapters?

Mondrian Nuessle: Each TOURMALET adapter features connectors for 6 external links on a standard PCIe card. These 6 connectors can be used to connect the adapters of a system in a direct topology. A natural choice for 6 links per node is the well-known 3D torus topology. Furthermore, each TOURMALET includes an advanced, non-blocking crossbar switch which routes packets from the host to one of the outgoing links, from incoming links to the host, and from one incoming link to another outgoing link.
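A 3D torus maps neatly onto six links: each node connects to its +x, -x, +y, -y, +z and -z neighbors, with wraparound at the edges. The sketch below picks an output port at every crossbar using dimension-order routing, a common textbook scheme swapped in here for illustration; TOURMALET itself uses table-based routing, and the port names are hypothetical.

```python
Coord = tuple[int, int, int]
AXES = "xyz"


def neighbors(node: Coord, dims: Coord) -> dict[str, Coord]:
    """The six torus neighbors reached via links +x, -x, +y, -y, +z, -z (with wraparound)."""
    result = {}
    for axis, name in enumerate(AXES):
        for step, sign in ((1, "+"), (-1, "-")):
            coord = list(node)
            coord[axis] = (coord[axis] + step) % dims[axis]
            result[sign + name] = tuple(coord)
    return result


def next_port(here: Coord, dest: Coord, dims: Coord) -> str:
    """Dimension-order routing: fix x, then y, then z, going the short way round each ring."""
    for axis, name in enumerate(AXES):
        forward = (dest[axis] - here[axis]) % dims[axis]
        if forward == 0:
            continue
        backward = dims[axis] - forward
        return ("+" if forward <= backward else "-") + name
    return "host"  # arrived: the crossbar delivers the packet to the local host port


def route(src: Coord, dest: Coord, dims: Coord) -> list[str]:
    """Ports taken hop by hop; every intermediate crossbar forwards link to link."""
    path, here = [], src
    while (port := next_port(here, dest, dims)) != "host":
        path.append(port)
        here = neighbors(here, dims)[port]
    return path
```

On a 4×4×4 torus, `route((0, 0, 0), (3, 2, 1), (4, 4, 4))` returns `["-x", "+y", "+y", "+z"]`: one hop backward around the x ring is shorter than three forward.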

By the way, the architecture is not limited to 3D torus networks; other topologies can be built and are supported by the internal router, of course with the constraint that each node has at most 6 link connections.
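Whether a candidate topology fits comes down to the degree constraint. As a hedged example, the sketch below checks a hypercube, where each node links to the nodes whose addresses differ in exactly one bit: a 6-dimensional hypercube (64 nodes) uses exactly 6 links per node, while a 7-dimensional one does not fit. The interview does not say which topologies EXTOLL's software supports beyond the torus; the helper names here are hypothetical.

```python
from collections import Counter

MAX_LINKS_PER_NODE = 6  # external links on a TOURMALET adapter, per the interview


def hypercube_links(dim: int) -> list[tuple[int, int]]:
    """Links of a `dim`-dimensional hypercube: nodes differing in exactly one address bit."""
    links = []
    for node in range(1 << dim):
        for bit in range(dim):
            peer = node ^ (1 << bit)
            if node < peer:  # record each undirected link once
                links.append((node, peer))
    return links


def over_limit(links: list[tuple[int, int]], max_links: int = MAX_LINKS_PER_NODE) -> dict[int, int]:
    """Nodes whose degree exceeds the per-adapter link budget, mapped to their degree."""
    degree = Counter()
    for a, b in links:
        degree[a] += 1
        degree[b] += 1
    return {node: d for node, d in degree.items() if d > max_links}
```

An empty result from `over_limit` means every node stays within the six available connectors.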

Source: EXTOLL

TOP500 News: To what extent does this network scale as far as the number of nodes? Will it be suitable for exascale systems?

Ulrich Brüning: The TOURMALET ASIC implements distributed table-based routing. The descriptors are 16 bits wide, thus limiting the number of nodes to 64K. However, this is not an architectural limit but was chosen for the TOURMALET hardware implementation. Future generations of EXTOLL hardware may increase the maximum network size.
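A 16-bit destination ID addresses 2^16 = 65,536 nodes, which is where the 64K figure comes from. The sketch below shows one way a distributed, table-based scheme can work: each router keeps its own table indexed by destination ID, mapping to an output port. The one-byte entry, the "unrouted" marker and the port numbering are hypothetical; the interview does not describe TOURMALET's actual table format.

```python
NODE_ID_BITS = 16
MAX_NODES = 1 << NODE_ID_BITS  # 65,536: the "64K" limit
UNROUTED = 0xFF                # hypothetical marker for "no route programmed"
PORTS = ("+x", "-x", "+y", "-y", "+z", "-z", "host")  # hypothetical port numbering


class RoutingTable:
    """One table per router; together they form a distributed, table-based routing scheme."""

    def __init__(self) -> None:
        # One byte per possible destination ID: 64 KiB per router in this sketch.
        self.next_port = bytearray([UNROUTED]) * MAX_NODES

    def program(self, dest_id: int, port: str) -> None:
        self._check(dest_id)
        self.next_port[dest_id] = PORTS.index(port)

    def lookup(self, dest_id: int) -> str:
        self._check(dest_id)
        entry = self.next_port[dest_id]
        if entry == UNROUTED:
            raise LookupError(f"no route to node {dest_id}")
        return PORTS[entry]

    @staticmethod
    def _check(dest_id: int) -> None:
        if not 0 <= dest_id < MAX_NODES:
            raise ValueError(f"node ID {dest_id} does not fit in {NODE_ID_BITS} bits")
```

Widening the ID field in a future generation would raise `MAX_NODES`, at the cost of larger tables in every router.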

Using the EXTOLL TOURMALET ASIC in 64K nodes would allow you to assemble a 0.2-exaflops (double-precision) supercomputer today, using processors like Intel’s Knights Landing Xeon Phi. The maximum distance within the network will be around 64 hops, and each hop is optimized for a switch delay of 60 ns. Increasing the FP performance by a factor of five in the next few years and/or increasing the network size would make exascale computing with EXTOLL network technology possible.
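These figures can be reproduced with simple arithmetic under two assumptions not stated in the interview: each node is a Xeon Phi 7250 at its nominal peak (68 cores × 1.4 GHz × 32 double-precision FLOP per cycle), and the 64K nodes form a 32×32×64 torus, a shape that gives exactly 65,536 nodes and a 64-hop diameter.

```python
import math

# Assumed node: Xeon Phi 7250 (Knights Landing) at nominal clock.
KNL_CORES = 68
KNL_CLOCK_HZ = 1.4e9
DP_FLOP_PER_CYCLE = 32  # 2 AVX-512 FMA units x 8 doubles x 2 FLOP per FMA


def torus_diameter(dims: tuple[int, ...]) -> int:
    """Worst-case hop count in a torus: half of each ring (rounded down), summed over dimensions."""
    return sum(k // 2 for k in dims)


def estimate(dims=(32, 32, 64), hop_ns=60, speedup=1.0) -> dict:
    """Peak performance and worst-case switch delay for a torus of identical nodes."""
    nodes = math.prod(dims)
    node_flops = KNL_CORES * KNL_CLOCK_HZ * DP_FLOP_PER_CYCLE  # about 3.05 TFLOPS
    diameter = torus_diameter(dims)
    return {
        "nodes": nodes,
        "peak_exaflops": nodes * node_flops * speedup / 1e18,
        "diameter_hops": diameter,
        "worst_case_switch_ns": diameter * hop_ns,
    }
```

`estimate()` gives about 0.1996 exaflops, a 64-hop diameter and 3,840 ns of accumulated switch delay on the longest path (excluding cable propagation and NIC overheads); `estimate(speedup=5)` lands at roughly 1 exaflops, matching the factor-of-five remark.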

TOP500 News: What’s your business strategy as far as bringing your product to market?

Ulrich Krackhardt: If you introduce a new technology, the first thing you have to do is to prove that it is reliable and that it renders the performance as promised and, last but not least, that applications really benefit from it at the end of the day.

Therefore, we are very happy about, and highly appreciate, the willingness of some real first-mover customers to implement and test our technology on a larger scale. A very well-documented project in this sense is the EU DEEP project and its successor, DEEP-ER, at the Jülich Supercomputing Centre (JSC).

For industrial customers, we are establishing relationships with system manufacturers and integrators, as well as with manufacturers and vendors of accelerators. For business that is clearly driven by technical or scientific considerations, we deal with the customers directly.

We offer EXTOLL technology in different ways: as a PCI Express plug-in board; as a standalone chip for board integration, including design-in service; and as design IP for integration into CPU designs.

TOP500 News: What’s next for EXTOLL?

Ulrich Krackhardt: Recently, EXTOLL has added an IP business as a new branch, since EXTOLL has proven its know-how and capability in designing and taping out complex ASICs. So we now offer both licensing of IP designs and IP development as contract work, provided it is sufficiently compatible with the IP field EXTOLL is working on for its own business.

Mondrian Nuessle: We also work on form factors and integration methods different from a single standard PCIe adapter, enabling different, denser systems connected by the EXTOLL networking technology.

As we believe that systems need to become denser and more energy efficient as we move towards exascale, EXTOLL is improving and further developing GreenICE, the innovative two-phase immersion cooling system we developed, which can enable unprecedentedly dense and efficient systems.