May 18, 2017

By: Michael Feldman

In a blog post, penned by Google veterans Jeff Dean and Urs Hölzle, the company announced it has developed and deployed its second-generation Tensor Processing Units (TPUs). The newly hatched TPU is being used to accelerate Google’s machine learning work and will also become the basis of a new cloud service.

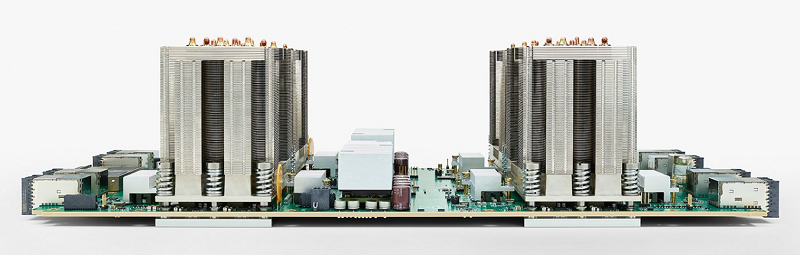

Second-generation TPU Board. Source: Google

Second-generation TPU Board. Source: Google

Machine learning software has become the lifeblood of Google and its hyperscale brethren. Everything from text search, to language translation, to ad serving, is now powered by the neural network models built with these applications. Most of the cloud giants are using GPUs to train these models, and either GPUs, CPUs, FPGAs, or some combination thereof to query them, in a process known as inferencing. Google, though, has decided to custom-build its own ASIC for these applications, and its second go at this could have far-ranging effects on the company, as well as the other stakeholders in the machine learning biz.

First, we should say that the search giant has released very little technical information about the new TPU, which is pretty much Google’s modus operandi with regard to hardware. It was just six weeks ago that the company gave a full account of its first-generation TPU, which had been in operation since 2015.

What they did reveal was that the new device can deliver a stunning 180 teraflops of performance. Google has also clustered 64 of these chips together, which they refer to a “TPU pod.” A single pod can deliver up to 11.52 petaflops of machine learning performance.

TPU pod. Source: Google

TPU pod. Source: Google

In Dean’s and Hölzle’s blog, they describe the improvement in training times that the new TPUs have delivered: “One of our new large-scale translation models used to take a full day to train on 32 of the best commercially-available GPUs—now it trains to the same accuracy in an afternoon using just one eighth of a TPU pod,” they write.

Unfortunately, the authors didn’t elaborate on the type of flops they were discussing – 8-bit, 16-bit, 32-bit, or mixed precision. The original TPU was essentially an 8-bit integer processor, which topped out at 92 teraops. The second-generation chip supports floating point operations, most likely using 16-bit and 32-bit operands.

The reason we can say this with some confidence is that, unlike the first-generation TPU, which was aimed squarely at inferencing already trained neural networks, the new TPU is designed for both inferencing and training. And for training, 16-bit and 32-bit floating point math is pretty much mandatory. Given that the old TPU had a highly parallel matrix multiply unit that did 8-bit integer multiply-and-add operations, we can speculate that the new chip has the same sort of unit, but supports 16-bit and 32-bit floating point operations.

If so, the TPU includes something akin to NVIDIA’s Tensor Cores, the deep learning engines inside the V100 Tesla GPU announced last week. One way to look at it is that the Tensor Core feature brought something of the TPU design into the GPU, while the second-generation TPU looks to borrow the GPU’s expanded capacity for floating point math.

The V100, by the way, delivers up to 120 teraflops of mixed precision (16-bit and 32-bit) matrix math. If the TPU’s 180 TPU teraflops are of the same caliber, then Google has surged ahead of the competition, at least in raw flops. Of course, other aspects of the hardware, like memory capacity, bandwidth, cache buffering, and instruction/thread management can significantly affect application performance, so at this point there is no definitive way to know if the TPU truly has a performance edge.

None of this would matter very much to NVIDIA if Google was just using the new TPU for internal needs, as it did with the original chip. But the search giant has decided to make the TPU available to cloud customers as part of its Google Compute Engine service. That means machine learning customers that might have rented NVIDIA GPUs on Amazon Web Services, Microsoft, Azure, IBM’s Bluemix, or elsewhere, now have the option of using Google’s purpose-built solution.

For now, it looks like the TPU only supports the TensorFlow deep learning framework, which makes sense, given that it was originally developed by Google and has been the framework of choice internally. One would assume additional frameworks like Caffe, Torch, Theano, and others will get ported over time, should the TPU attract a critical mass of users.

Since NVIDIA is ramping up its own cloud platform, Google’s TPU cloud will also compete directly with that effort. It should be noted, however, that Google will still offer NVIDIA GPU instances for its cloud customers – at least for now. Thanks to NVIDIA’s considerable effort at building up a software ecosystem for its GPUs over the last decade, there is a vast application and customer base for its hardware. Obviously, that’s something the TPU does not yet have.

To help jumpstart user interest in the new hardware, Google is making 1,000 TPUs available to machine learning researchers for free. The company is also looking for power users who are interested in getting access to entire TPU pods. You can learn more about those programs here.