Aug. 17, 2016

By: Michael Feldman

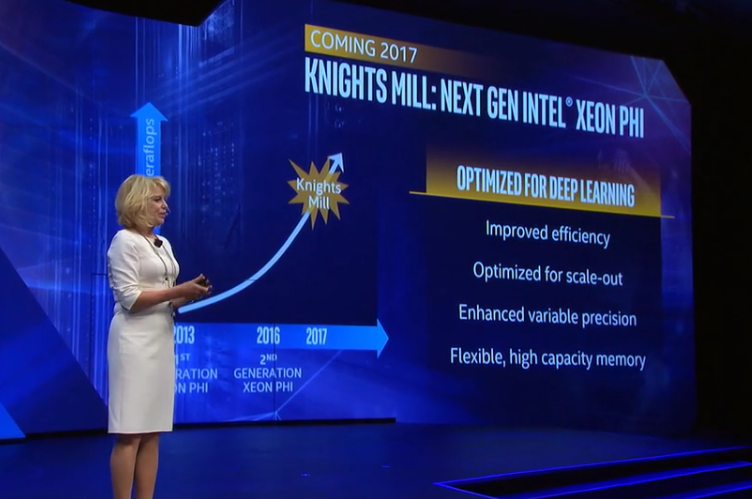

At the Intel Developer Forum (IDF) this week in San Francisco, Intel revealed it is working on a new Xeon Phi processor aimed at deep learning applications. Diane Bryant, executive VP and GM of Intel's Data Center Group, unveiled the new chip, known as Knights Mill, during her IDF keynote address on Wednesday.

Unveiled is probably too strong a word, inasmuch as there were no visuals or specifications provided about the new product, which is scheduled to be released in 2017. Bryant only characterized the design as a “next generation” Xeon Phi that will be optimized for deep learning, an application set that hyperscalers, AI researchers, and others have become so enamored with over the past few years. Knights Mill is not intended to usurp the recently launched Knights Landing, nor replace Knights Hill, its follow-on chip to be built with 10nm transistors. Both of these are still being positioned as more general-purpose manycore processors geared for vector-math-intensive HPC work. Knights Landing was officially launched in June at ISC 2016, during which the chipmaker said it expects to sell around 100,000 units this year alone.

As good as Intel has been at collecting HPC wins, it has been late to the game in deep learning, with NVIDIA taking an early and dominant position in this market with its Tesla GPUs. Yes, Intel Xeons are the most prevalent CPU host in those deep learning clusters, but it’s the GPU co-processors that end up doing the heavy lifting, especially with regard to training the models. For this application set, the NVIDIA GPU has become the de facto processor platform for hyperscale neural networks, with significant deployments at Microsoft, Amazon, Alibaba and Baidu.

NVIDIA’s revenue from deep learning customers currently accounts for about half its datacenter sales, with HPC making up about a third, and the rest going to virtualization (i.e., VDI). To get a sense of what that means in hard numbers, NVIDIA’s datacenter revenue for its most recent quarter alone (the second quarter of its fiscal 2017) amounted to $151 million.

That level of interest, which has been building over the last several years, is what motivated NVIDIA to start tweaking its GPUs for better performance on deep learning workloads, starting with maximizing single precision (FP32) performance in the Maxwell processors and adding half precision capabilities (FP16) in the Pascal architecture. Unlike HPC, deep learning software is able to leverage these lower precision capabilities to great effect, improving throughput significantly.
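To make the precision trade-off concrete, here is a minimal sketch in Python, using only the standard library's `struct` module. It shows what halving the precision means for a stored value: half the bytes, at the cost of a larger rounding error. The helper names (`roundtrip`, `bytes_needed`), the sample weight of 0.1, and the one-million-weight count are illustrative assumptions, and the sketch says nothing about the throughput of any particular GPU or Xeon Phi part.

```python
import struct

# IEEE 754 format codes understood by Python's struct module:
# "<f" is single precision (FP32), "<e" is half precision (FP16, Python 3.6+).
FP32 = "<f"
FP16 = "<e"


def roundtrip(value, fmt):
    """Pack a Python float into the given format and unpack it again."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def bytes_needed(count, fmt):
    """Bytes required to store `count` values in the given format."""
    return count * struct.calcsize(fmt)


# Hypothetical model weight, chosen only for illustration.
weight = 0.1
fp32_error = abs(roundtrip(weight, FP32) - weight)
fp16_error = abs(roundtrip(weight, FP16) - weight)

# Hypothetical layer with one million weights.
n_weights = 1_000_000
fp32_bytes = bytes_needed(n_weights, FP32)
fp16_bytes = bytes_needed(n_weights, FP16)

print(f"FP32: {fp32_bytes} bytes, rounding error {fp32_error:.2e}")
print(f"FP16: {fp16_bytes} bytes, rounding error {fp16_error:.2e}")
```

The same number of weights takes half the memory and memory bandwidth in FP16, which is one reason lower precision can raise throughput on workloads that tolerate the extra rounding error.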

Not surprisingly, Intel doesn’t want to cede such a market to a major rival, especially considering its broad ambitions in the datacenter and the availability of a suitable design in Xeon Phi. In fact, when Intel launched its latest rendition of the architecture in Knights Landing, it touted the processor’s deep learning capabilities as part of an effort to blunt some of NVIDIA’s success. During the launch festivities, Intel claimed that the new Xeon Phi could run faster and scale better than GPUs on these workloads. As we reported yesterday, NVIDIA took exception to such claims, especially with regard to the latest Pascal GPU hardware.

Intel’s plans for a deep learning chip reflect some recognition that the company has lost ground to make up in this area. A purpose-built deep learning design is probably a shrewd move, and an acknowledgment that the Xeon Phi architecture will not be able to outrun its GPU competition from strictly a FLOPS standpoint. As long as the market is big enough to support it, hardware specialization seems like a reasonable approach.

How this plays out in actual features is mostly conjecture, although Intel has already indicated it would be adding low precision circuitry to the chips, just as NVIDIA has done. Perhaps the Knights Mill platform will also include extra memory and bandwidth, using a more generous implementation of the hybrid memory cube (HMC) technology that was introduced with Knights Landing. Intel could even throw some non-volatile 3D XPoint memory into the mix. Having all of these features in a standalone processor that requires no host CPU to operate could be enough to entice some of the hyperscalers out of the NVIDIA camp.

An even more exciting prospect would be to leverage the hardware and software IP from Nervana, a deep learning startup Intel is in the process of acquiring. For what it’s worth, Nervana was working on a custom deep learning processor of its own, which was also scheduled for release in 2017. That chip was supposed to be 10 times faster than a GPU for such work. Whether Nervana’s deep learning circuitry can make it onto the Knights Mill silicon, or is even compatible with it, is unknown. But having this kind of expertise in-house could turn out to be more important than any particular technology Intel will inherit.

Since 2017 is just around the corner, deep learning enthusiasts shouldn’t have to wait too long to see what Intel comes up with. And by that time, we’re almost sure to see some additional tweaks from NVIDIA in its Pascal lineup, up to and including deep-learning-specific parts, as it did with the Maxwell M40 and M4 GPUs. All of which, of course, is good news for users of the technology. If you thought this hardware was moving fast before, wait until you see what a little competition brings.