Nov. 28, 2017

By: Michael Feldman

Oak Ridge National Laboratory (ORNL) recently acquired the Atos Quantum Learning Machine (QLM), a quantum computing simulator that lets researchers create qubit-friendly algorithms. The deployment is part of a larger effort at ORNL to develop quantum computing technologies at the US Department of Energy.

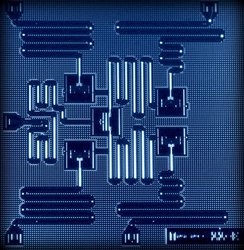

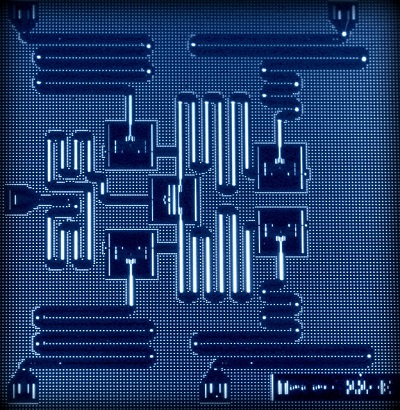

The QLM simulator, which Atos unveiled in July, is a conventional computer that allows developers to write programs that will work on quantum computing hardware once it becomes generally available. The company has characterized the system as “an ultra-compact supercomputer,” although details of the hardware have not been made public. The machine can be programmed in a language called aQasm (Atos Quantum Assembly Language), which enables programmers to write code at the level of quantum gates. Atos is hoping the language will become something of a standard for quantum computing once qubit-powered hardware becomes commercially available.
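To give a rough idea of what gate-level programming looks like when a classical machine plays the role of the quantum processor, here is a minimal Python sketch. It is not aQasm and not Atos code; `apply_gate`, `apply_cnot`, and `bell_state` are hypothetical names. The simulator stores a two-qubit state as 2^2 complex amplitudes and applies a Hadamard gate followed by a CNOT gate, the standard recipe for an entangled Bell state.

```python
import math

# Basis index i encodes a bitstring; qubit k is bit k of i (qubit 0 = least significant).
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply_gate(state, gate, target):
    """Apply a 2x2 single-qubit gate to qubit `target` of a state vector."""
    new_state = list(state)
    bit = 1 << target
    for i in range(len(state)):
        if not i & bit:  # visit each (target=0, target=1) amplitude pair once
            j = i | bit
            a, b = state[i], state[j]
            new_state[i] = gate[0][0] * a + gate[0][1] * b
            new_state[j] = gate[1][0] * a + gate[1][1] * b
    return new_state

def apply_cnot(state, control, target):
    """Flip `target` in every basis state where `control` is 1 (a permutation of amplitudes)."""
    new_state = list(state)
    for i in range(len(state)):
        if (i >> control) & 1:
            new_state[i] = state[i ^ (1 << target)]
    return new_state

def bell_state():
    """Prepare (|00> + |11>)/sqrt(2) from |00> with H on qubit 0, then CNOT 0 -> 1."""
    state = [1 + 0j, 0j, 0j, 0j]
    state = apply_gate(state, H, target=0)
    state = apply_cnot(state, control=0, target=1)
    return state

probabilities = [abs(a) ** 2 for a in bell_state()]
print([round(p, 3) for p in probabilities])  # [0.5, 0.0, 0.0, 0.5]
```

Measuring this state yields 00 or 11 with equal probability and never 01 or 10, the kind of correlated result a gate-level program is written to produce.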


ORNL has helped develop its own quantum computing framework, known as XACC. It uses a host-accelerator model, much like OpenCL or CUDA, which assumes the application will run on a conventional host and offload computationally demanding work to a coprocessor, in this case, a quantum computing device. The framework supports a number of languages and offers a high-level API for doing the quantum computing offload. It is also designed to be hardware agnostic, so that applications can be recompiled for different platforms without modification.
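The host-accelerator split can be sketched in a few lines of Python. Everything below is hypothetical: `Accelerator`, `StateVectorSimulator`, `BACKENDS`, and `run_on` are illustrative names, not XACC's actual API, and the gate-list kernel format is an assumption. The point is that host code only names a backend and hands off a kernel, so swapping a simulator for real hardware would mean registering a different class rather than rewriting the application.

```python
from abc import ABC, abstractmethod
import math
import random

class Accelerator(ABC):
    """Hypothetical offload interface: the host never sees how gates are executed."""

    @abstractmethod
    def execute(self, kernel, n_qubits, shots):
        """Run a kernel (a list of gate tuples) and return bitstring -> count."""

class StateVectorSimulator(Accelerator):
    """Classical stand-in for a quantum device; supports only "h" and "cx" gates."""

    def __init__(self, seed=None):
        self.rng = random.Random(seed)

    def execute(self, kernel, n_qubits, shots):
        state = [0j] * (1 << n_qubits)
        state[0] = 1 + 0j
        h = 1 / math.sqrt(2)
        for gate in kernel:
            if gate[0] == "h":
                bit = 1 << gate[1]
                for i in range(len(state)):
                    if not i & bit:  # update each amplitude pair once
                        a, b = state[i], state[i | bit]
                        state[i], state[i | bit] = h * (a + b), h * (a - b)
            elif gate[0] == "cx":
                c, t = 1 << gate[1], 1 << gate[2]
                for i in range(len(state)):
                    # Swap each pair once: visit only indices with control=1, target=0.
                    if i & c and not i & t:
                        state[i], state[i | t] = state[i | t], state[i]
            else:
                raise ValueError(f"unsupported gate: {gate[0]}")
        # Sample measurement outcomes from the final probabilities.
        probs = [abs(a) ** 2 for a in state]
        outcomes = self.rng.choices(range(len(state)), weights=probs, k=shots)
        counts = {}
        for idx in outcomes:
            key = format(idx, f"0{n_qubits}b")
            counts[key] = counts.get(key, 0) + 1
        return counts

# Registry of backends; a hardware backend would be registered the same way.
BACKENDS = {"local-sim": StateVectorSimulator}

def run_on(backend_name, kernel, n_qubits, shots=1000, **options):
    """Host-side entry point: pick an accelerator by name and offload the kernel."""
    accelerator = BACKENDS[backend_name](**options)
    return accelerator.execute(kernel, n_qubits, shots)

bell = [("h", 0), ("cx", 0, 1)]
counts = run_on("local-sim", bell, n_qubits=2, shots=1000, seed=42)
print(counts)  # only "00" and "11" should appear
```

The host code at the bottom contains nothing device-specific, which is the property the hardware-agnostic design aims for.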

Hardware independence will likely be a critical attribute, given the multitude of quantum computers currently under development. Companies like Google, IBM, Intel, Microsoft, and others are creating their own processors using different technologies and designs. At this point, it's unclear if and when the market will settle on a single platform, but if history is a guide, the early days of quantum computing will be characterized by an array of rapidly evolving architectures.

At this point, both Google and IBM appear to be closing in on launching quantum computing chips with around 50 qubits, a milestone that many believe will deliver “quantum supremacy,” that is, the point at which a conventional digital computer can no longer simulate the equivalent quantum computations. IBM recently announced that it has developed a 50-qubit prototype that it expects to have ready for commercial users in the next year or so. Meanwhile, Google has promised to unveil a 49-qubit computer before the end of the year. The quantum supremacy milestone may be pushed out a bit, since researchers recently demonstrated a 56-qubit simulation on a classical machine, but as more qubits are added, it becomes increasingly difficult to replicate the quantum behavior.

The QLM system offered by Atos is able to simulate up to 40 qubits, although the one acquired by ORNL is a 30-qubit machine. But this is not the only technology the lab is using to explore quantum computing. ORNL Quantum Computing Institute Director Dr. Travis Humble says he and his colleagues are also collaborating with the quantum computing group at Google, as well as with IonQ, a quantum computing startup that is developing systems based on trapped-ion technology. In addition, ORNL is using IBM’s Q environment and D-Wave’s latest 2000Q system, both of which are accessed via a cloud service.
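A back-of-the-envelope calculation shows why each added qubit matters so much for classical simulation. It assumes the simplest method, storing the full quantum state as 2^n complex amplitudes at 16 bytes each (double precision); this is an illustrative assumption, since Atos has not disclosed how the QLM works internally, and more sophisticated techniques can reduce the requirement for particular circuits. The helper names are hypothetical.

```python
BYTES_PER_AMPLITUDE = 16  # one complex amplitude stored as two 64-bit floats

def state_vector_bytes(n_qubits):
    """Memory for a full state vector of n qubits: 2**n amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

def human(nbytes):
    """Format a byte count using binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(nbytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024

for n in (30, 40, 49, 50, 56):
    print(f"{n} qubits: {human(state_vector_bytes(n))}")
# 30 qubits: 16 GiB
# 40 qubits: 16 TiB
# 49 qubits: 8 PiB
# 50 qubits: 16 PiB
# 56 qubits: 1 EiB
```

Under this assumption, the gap between ORNL's 30-qubit configuration and the 40-qubit maximum is a factor of 1,024 in memory, and each further qubit doubles it again.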

Humble says the work is aimed at exploring the landscape of quantum computing devices and developing system software and applications in anticipation of useful commercial systems. In this case, he’s talking about processors on the order of 100 qubits or more, whose computations would be far beyond the reach of even the largest conventional supercomputers. Humble thinks such machines will be able to advance scientific applications in areas like computational chemistry, materials science, climate forecasting, machine learning, and graph analytics, where digital technology is running up against the limits imposed by binary logic gates and memory. Even exascale supercomputers with petabytes of memory won't be able to manage the level of computation necessary for the types of optimization problems envisioned by developers.

One of the fundamental challenges in all this is figuring out if the algorithms are correct once these more powerful quantum computing systems are available. At that point, the software can no longer be verified with simulators on classical hardware, leaving developers without a traditional sanity check. “That kind of freaks me out,” admits Humble.

Such uncertainties have not discouraged the DOE. Although most of its national labs have been working on quantum computing technologies for years, the prospect of commercially available systems is drawing increased investment from the agency, especially on the software side. In September of this year, Lawrence Berkeley National Lab (LBNL) was allocated $3 million per year to develop quantum computing prototypes, applications, and tools, while in October, ORNL itself announced it will receive $10.5 million over five years to develop quantum algorithms and assess the feasibility of different architectures for scientific codes. Other labs, including Lawrence Livermore, Sandia, and Argonne, have smaller efforts underway.

While those numbers pale in comparison to the hundreds of millions of dollars currently spent on conventional supercomputing, the DOE appears to be envisioning a not-too-distant future where bits and qubits co-mingle in its datacenters. And all of this appears to be coming to fruition in the age of exascale computers. Whether those systems become primarily front-ends for the new qubit machinery or find their own niche where all those flops can be put to good use remains to be seen, but either way, the nature of high performance computing appears to be poised for a quantum leap.